Gradient boosting python

Accurate machine learning takes more than fitting a model and making predictions. Most successful models in industry and in competitions rely on feature engineering or ensemble techniques to improve their performance. Ensemble techniques are generally simpler to apply than feature engineering, which is why they have gained popularity. Gradient boosting is a functional gradient descent algorithm: at each step it selects a function (a weak hypothesis) that points in the direction of the negative gradient, so that adding it to the model reduces the loss function. A gradient boosting classifier combines several weak learning models to produce a powerful predictive model.

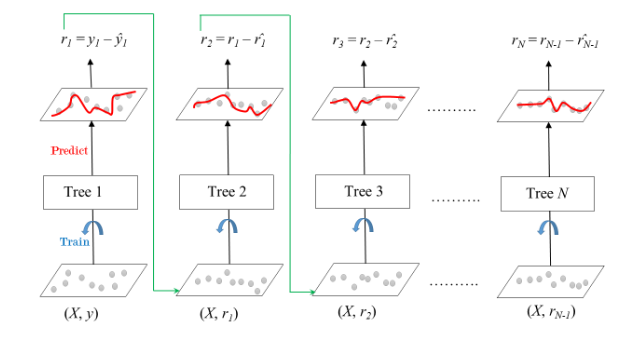

Gradient boosting is a popular boosting algorithm in machine learning, used for both classification and regression tasks. Boosting is an ensemble learning method that trains models sequentially, with each new model trying to correct the errors of the previous one, so that several weak learners are combined into a strong learner. Two of the most popular boosting algorithms are AdaBoost and gradient boosting.

In gradient boosting, each new model is trained to reduce the loss of the current ensemble (such as mean squared error or cross-entropy) using gradient descent. In each iteration, the algorithm computes the gradient of the loss function with respect to the predictions of the current ensemble and then trains a new weak model to fit the negative of this gradient. For squared-error loss, the negative gradient is simply the residual error. The predictions of the new model are then added to the ensemble, and the process is repeated until a stopping criterion is met. In contrast to AdaBoost, the weights of the training instances are not tweaked; instead, each predictor is trained using the residual errors of its predecessor as labels.

The diagram shows how gradient-boosted trees are trained for regression problems. The ensemble consists of M trees. Tree1 is trained using the feature matrix X and the labels y. Its predictions, labeled ŷ1, are used to determine the training set residual errors r1.
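The residual-fitting loop described above can be sketched from scratch. This is a minimal illustration rather than a reference implementation: it assumes squared-error loss, a single 1-D feature, and depth-1 trees (stumps) as the weak learners. The helper names `fit_stump` and `fit_gradient_boosting`, the toy data, and the `learning_rate` shrinkage factor are all illustrative choices that do not come from the text.

```python
# From-scratch sketch of gradient boosting for regression with squared-error
# loss, using only the standard library. All names here are hypothetical.

def fit_stump(xs, targets):
    """Fit a depth-1 regression tree: pick the threshold with the lowest squared error."""
    best = None
    # Skip the largest value so the right-hand side of the split is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def fit_gradient_boosting(xs, ys, n_trees=50, learning_rate=0.1):
    """Train n_trees stumps, each on the residuals of the ensemble so far."""
    base = sum(ys) / len(ys)          # initial prediction: the mean label
    preds = [base] * len(ys)
    trees = []
    for _ in range(n_trees):
        # For squared error, the negative gradient is the residual y - prediction.
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        # Add the (shrunken) predictions of the new tree to the ensemble.
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(tree(x) for tree in trees)


def mean_squared_error(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data (an assumption for illustration): y = x^2 on a small grid.
xs = [i / 10 for i in range(40)]
ys = [x ** 2 for x in xs]

mean_y = sum(ys) / len(ys)
baseline_mse = mean_squared_error(lambda x: mean_y, xs, ys)
model = fit_gradient_boosting(xs, ys)
boosted_mse = mean_squared_error(model, xs, ys)

print(f"baseline MSE: {baseline_mse:.3f}, boosted MSE: {boosted_mse:.3f}")
```

Each stump is trained on what the current ensemble still gets wrong, so the training error of the boosted model ends up below that of the constant baseline. A library implementation would use deeper trees and multiple features, but the loop has the same shape.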

When trees are grown in best-first fashion, the best nodes are defined by their relative reduction in impurity. The algorithm builds an additive model in a forward stage-wise fashion, which allows for the optimization of arbitrary differentiable loss functions. With each iteration, the ensemble should get closer to the final model.

For classification, each stage fits regression trees on the negative gradient of the loss function, one tree per class. Binary classification is a special case where only a single regression tree is induced. The loss function to be optimized is a configurable parameter of the estimator.
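The special case for binary classification can be checked with scikit-learn's GradientBoostingClassifier. The sketch below is illustrative only: the synthetic datasets from `make_classification` and the choice of 20 boosting stages are assumptions, not part of the text. It inspects the fitted `estimators_` array, which holds the regression trees trained at each stage.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

n_estimators = 20  # number of boosting stages (arbitrary choice for the sketch)

# Binary problem on synthetic data (assumed for illustration).
X_bin, y_bin = make_classification(
    n_samples=300, n_features=10, n_informative=5, n_classes=2, random_state=0
)
binary_clf = GradientBoostingClassifier(
    n_estimators=n_estimators, random_state=0
).fit(X_bin, y_bin)

# Three-class problem on synthetic data.
X_multi, y_multi = make_classification(
    n_samples=300, n_features=10, n_informative=5, n_classes=3, random_state=0
)
multi_clf = GradientBoostingClassifier(
    n_estimators=n_estimators, random_state=0
).fit(X_multi, y_multi)

# estimators_ has one row per boosting stage and one column per tree in that stage.
print("binary:", binary_clf.estimators_.shape)
print("3-class:", multi_clf.estimators_.shape)
```

The second dimension of `estimators_` shows how many regression trees each stage induces: a single tree in the binary case, and one per class otherwise.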

Two very famous examples of ensemble methods are gradient-boosted trees and random forests. More generally, ensemble models can be applied to any base learner beyond trees, in averaging methods such as bagging, model stacking, or voting, or in boosting methods such as AdaBoost.

AdaBoostClassifier is a meta-estimator that begins by fitting a classifier on the original dataset and then fits additional copies of the classifier on the same dataset, with the weights of incorrectly classified instances adjusted so that subsequent classifiers focus more on difficult cases. XGBoost is a more regularized version of gradient boosting. These methods differ from one another in two key factors.

As an example, we obtain results from GradientBoostingRegressor with least squares loss and regression trees of depth 4. By default, no pruning is performed. The mean squared error (MSE) is then computed on the test set. For this example, the impurity-based and permutation methods identify the same 2 strongly predictive features, but not in the same order.
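A regression run of this kind might look like the sketch below. The text does not name the dataset or the remaining hyperparameters, so the diabetes dataset bundled with scikit-learn, the train/test split, `n_estimators=200`, and `learning_rate=0.05` are all assumptions made for illustration. The `loss` argument is left at its default, which is squared error (least squares) in scikit-learn.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.inspection import permutation_importance
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Dataset and split are assumptions; the text does not specify them.
data = load_diabetes()
X, y = data.data, data.target
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.1, random_state=13
)

# Default loss is squared error; trees are limited to depth 4 as in the text.
reg = GradientBoostingRegressor(
    n_estimators=200, max_depth=4, learning_rate=0.05, random_state=0
)
reg.fit(X_train, y_train)

mse = mean_squared_error(y_test, reg.predict(X_test))
print(f"MSE on test set: {mse:.2f}")

# Impurity-based importances come from the fitted trees themselves.
impurity_importance = reg.feature_importances_

# Permutation importances measure the score drop when a feature is shuffled.
perm = permutation_importance(reg, X_test, y_test, n_repeats=10, random_state=0)

# Rank features from most to least important under each method.
impurity_rank = np.argsort(impurity_importance)[::-1]
permutation_rank = np.argsort(perm.importances_mean)[::-1]
print("impurity-based:", [data.feature_names[i] for i in impurity_rank])
print("permutation:   ", [data.feature_names[i] for i in permutation_rank])
```

Comparing the two printed rankings shows whether both methods agree on the most predictive features. The exact order depends on the data, the split, and the random seed, so the output is not reproduced here.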

Gradient boosting classifiers are a group of machine learning algorithms that combine many weak learning models together to create a strong predictive model.

Tree2 is then trained using the feature matrix X and the residual errors r1 of Tree1 as labels. The process is repeated until all the M trees forming the ensemble are trained. This is the additive model: the trees are added incrementally, iteratively, and sequentially.

Once the ensemble is fitted, it is possible to retrieve, for each datapoint x in X and for each tree in the ensemble, the index of the leaf that x ends up in. The random seed of the estimator also controls the random permutation of the features at each split.
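Both points can be tried out with scikit-learn's GradientBoostingRegressor, whose `apply` method returns leaf indices and whose `random_state` parameter fixes the seed. The sketch below is illustrative: the synthetic data from `make_regression`, the hypothetical `build_model` helper, and the hyperparameter values are assumptions. `max_features=4` is set so that feature subsampling at each split actually depends on the seed.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic data, assumed for illustration.
X, y = make_regression(n_samples=200, n_features=8, noise=10.0, random_state=0)


def build_model():
    """Hypothetical helper: same configuration and seed on every call."""
    return GradientBoostingRegressor(
        n_estimators=30, max_depth=3, max_features=4, random_state=42
    )


model_a = build_model().fit(X, y)
model_b = build_model().fit(X, y)

# One leaf index per sample and per tree in the ensemble.
leaves = model_a.apply(X)
print("leaf index array shape:", leaves.shape)

# With the same seed, the feature permutations at each split repeat,
# so the two fits produce the same predictions.
same_predictions = np.allclose(model_a.predict(X), model_b.predict(X))
print("identical predictions with the same seed:", same_predictions)
```

The leaf-index array has one row per sample and one column per tree, which makes it usable as a learned feature encoding for downstream models.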
