Huggingface tokenizers

As we saw in the preprocessing tutorial, tokenizing a text means splitting it into words or subwords, which are then converted to ids through a look-up table. Converting words or subwords to ids is straightforward, so in this summary we will focus on splitting a text into words or subwords. Note that on each model page, you can look at the documentation of the associated tokenizer to know which tokenizer type was used by the pretrained model. For instance, if we look at BertTokenizer, we can see that the model uses WordPiece.

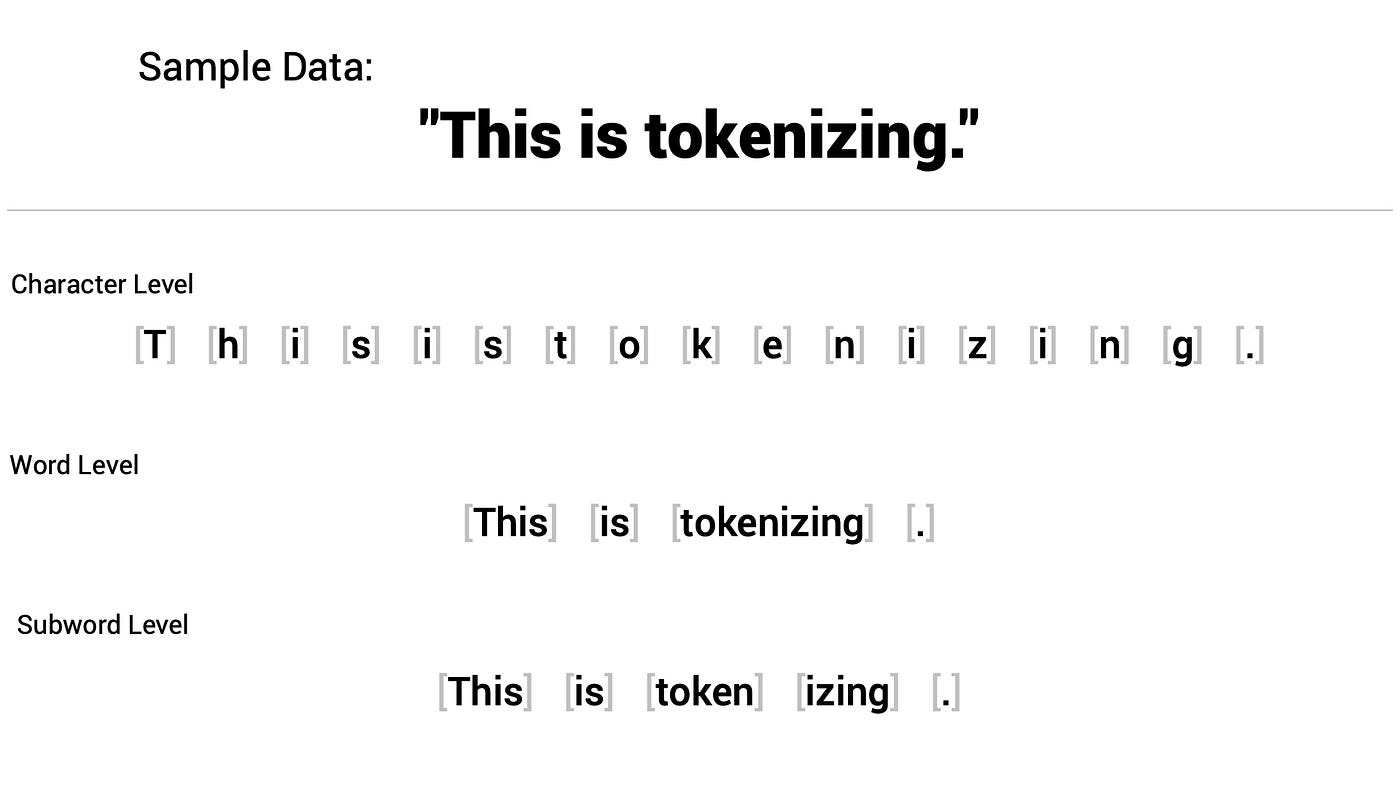

Tokenizers are one of the core components of the NLP pipeline. They serve one purpose: to translate text into data that can be processed by the model. In NLP tasks, the data that is generally processed is raw text. However, models can only process numbers, so tokenizers need to convert our text inputs to numerical data. The goal is to find the most meaningful representation (that is, the one that makes the most sense to the model) and, if possible, the smallest representation.

The first type of tokenizer that comes to mind is word-based. For example, in the accompanying image, the goal is to split the raw text into words and find a numerical representation for each of them. There are different ways to split the text, and there are also variations of word tokenizers that have extra rules for punctuation. Each word gets assigned an ID, starting from 0 and going up to the size of the vocabulary.
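To make the word-to-ID idea concrete, here is a minimal sketch in plain Python. It assumes the simplest possible splitting rule (whitespace only) and builds the vocabulary from the input itself; the helper name `build_vocab` and the sample sentence are made up for illustration and are not part of any library.

```python
def build_vocab(tokens):
    """Assign each distinct token an ID, starting from 0, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


# Illustrative input; whitespace splitting is the simplest word-based rule.
text = "the cat sat on the mat"
tokens = text.split()

vocab = build_vocab(tokens)
ids = [vocab[token] for token in tokens]

print(tokens)  # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(vocab)   # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
print(ids)     # [0, 1, 2, 3, 0, 4]
```

Note that the repeated word "the" maps to the same ID both times, which is exactly what the look-up table is for.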

Released: Feb 12. Hugging Face Tokenizers provides an implementation of today's most used tokenizers, with a focus on performance and versatility. The Python package consists of bindings over the Rust implementation; if you are interested in the high-level design, it is described alongside that implementation. We provide some pre-built tokenizers to cover the most common cases.

Splitting a text into smaller chunks is a task that is harder than it looks, and there are multiple ways of doing so. Take an example text that ends with "Transformers? We sure do." A simple way of tokenizing this text is to split it by spaces. This is a sensible first step, but if we look at a token such as "Transformers?", we see that the punctuation stays attached to the word, which is suboptimal. We should take the punctuation into account so that a model does not have to learn a different representation of a word and every possible punctuation symbol that could follow it, which would explode the number of representations the model has to learn.
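The difference between the two splitting strategies can be sketched with the standard library alone. The snippet uses only the fragment of the example text quoted above, and the regular expression is one possible punctuation rule chosen for illustration, not the rule any particular tokenizer uses.

```python
import re

text = "Transformers? We sure do."

# Naive approach: split on whitespace only; punctuation stays glued to words.
space_tokens = text.split()

# Variation with a punctuation rule: \w+ matches a run of word characters,
# [^\w\s] matches a single character that is neither a word character nor whitespace.
punct_tokens = re.findall(r"\w+|[^\w\s]", text)

print(space_tokens)  # ['Transformers?', 'We', 'sure', 'do.']
print(punct_tokens)  # ['Transformers', '?', 'We', 'sure', 'do', '.']
```

With the second rule, "Transformers" and "do" each get a single representation regardless of the punctuation that follows them.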


A tokenizer is in charge of preparing the inputs for a model. The library contains tokenizers for all the models, and the tokenizer classes inherit from PreTrainedTokenizerBase. Several arguments control how inputs are prepared. One defines, through its value, the number of overlapping tokens. Another, if set to True, makes the tokenizer assume the input is already split into words (for instance, by splitting it on whitespace), which it will then tokenize; this is useful for NER or token classification. A further option requires padding to be activated.
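The idea of a "number of overlapping tokens" can be pictured with a small sketch. This is plain Python rather than the library's implementation: the function name `chunk_with_overlap` and its parameters `max_length` and `overlap` are hypothetical, chosen only to show how consecutive windows over a long sequence of token ids can share some tokens. (In the Transformers library this value is, to our knowledge, passed as the `stride` argument.)

```python
def chunk_with_overlap(ids, max_length, overlap):
    """Split a list of token ids into windows of at most max_length ids,
    where each window starts with the last `overlap` ids of the previous one.

    Hypothetical helper for illustration only.
    """
    if not 0 <= overlap < max_length:
        raise ValueError("overlap must satisfy 0 <= overlap < max_length")

    step = max_length - overlap  # how far the window advances each time
    chunks = []
    start = 0
    while True:
        chunks.append(ids[start:start + max_length])
        if start + max_length >= len(ids):
            break  # this window already reaches the end of the sequence
        start += step
    return chunks


token_ids = list(range(10))  # stand-in for real token ids
chunks = chunk_with_overlap(token_ids, max_length=4, overlap=2)
for chunk in chunks:
    print(chunk)
# [0, 1, 2, 3]
# [2, 3, 4, 5]
# [4, 5, 6, 7]
# [6, 7, 8, 9]
```

Each window repeats the last two ids of the previous one, so context that would otherwise be cut at a window boundary appears in full in at least one window.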

But here too some questions arise concerning spaces and punctuation.

A tokenizer converts a token string (or a sequence of tokens) into a single integer id (or a sequence of ids), using the vocabulary.

A base vocabulary that includes all possible base characters can be quite large. All tokenization algorithms described so far have the same problem: it is assumed that the input text uses spaces to separate words.

Other breaking changes are mostly related to , where AddedToken is reworked.
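The token-to-id conversion described above amounts to a dictionary look-up. The sketch below is a stand-alone illustration, not the library's method: the function name `tokens_to_ids`, the toy vocabulary, and the choice to map unknown tokens to an `[UNK]` id are all assumptions made for the example.

```python
from typing import Dict, List, Union


def tokens_to_ids(
    tokens: Union[str, List[str]],
    vocab: Dict[str, int],
    unk_id: int = 0,
) -> Union[int, List[int]]:
    """Convert one token to one id, or a sequence of tokens to a list of ids.

    Tokens missing from the vocabulary fall back to unk_id (an assumption
    made for this sketch).
    """
    if isinstance(tokens, str):
        return vocab.get(tokens, unk_id)
    return [vocab.get(token, unk_id) for token in tokens]


# Toy vocabulary, invented for illustration.
toy_vocab = {"[UNK]": 0, "we": 1, "sure": 2, "do": 3}

print(tokens_to_ids("sure", toy_vocab))                          # 2
print(tokens_to_ids(["we", "sure", "do", "indeed"], toy_vocab))  # [1, 2, 3, 0]
```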

The tokenization process is done by the tokenize method of the tokenizer. Tokenizers also manage special tokens such as mask and beginning-of-sentence tokens. A look-up from a word to its tokens returns None if no tokens correspond to the word. Acceptable values for the return type include 'tf', which returns TensorFlow tf.Tensor objects.

Subword tokenization is especially useful in agglutinative languages such as Turkish, where you can form almost arbitrarily long complex words by stringing together subwords.
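To give a feel for what a subword tokenize step might do, here is a toy sketch of greedy longest-match-first splitting, the way WordPiece-style tokenizers are commonly described. It is not the library's code: the function name `wordpiece_tokenize`, the `##` continuation prefix convention as applied here, and the tiny vocabulary are assumptions for illustration.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedily split a single word into the longest subwords found in vocab.

    Pieces that do not start the word carry a '##' prefix. If some part of
    the word cannot be matched, the whole word becomes unk_token.
    """
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]
        tokens.append(piece)
        start = end
    return tokens


# Toy vocabulary, invented for illustration.
toy_vocab = {"token", "##ization", "##izer", "##s"}

print(wordpiece_tokenize("tokenization", toy_vocab))  # ['token', '##ization']
print(wordpiece_tokenize("tokenizers", toy_vocab))    # ['token', '##izer', '##s']
print(wordpiece_tokenize("xyz", toy_vocab))           # ['[UNK]']
```

Long words built by stringing subwords together, as in agglutinative languages, can be covered this way by a comparatively small vocabulary of pieces.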
