io.confluent.kafka.serializers.KafkaAvroSerializer


There are two ways to interact with Kafka: using a native client for your language combined with serializers compatible with Schema Registry, or using the REST Proxy. Most commonly, you will use the serializers if your application is written in a language with supported serializers, and the REST Proxy for applications written in other languages. Whichever method you choose, the most important factor is that your application coordinates with Schema Registry to manage schemas and guarantee data compatibility.

Java applications can use the standard Kafka producers and consumers, but substitute io.confluent.kafka.serializers.KafkaAvroSerializer (and the equivalent deserializer) for the default ByteArraySerializer. This allows Avro data to be passed directly to the producer, and allows the consumer to deserialize and return Avro data.
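As a minimal sketch of the substitution described above, the producer settings can be assembled as plain properties. The property keys and class names are the standard Kafka and Confluent ones; the helper name `producerProperties`, the use of a string key serializer, and the `localhost:9092` and `http://localhost:8081` addresses are assumptions for illustration only. The resulting `Properties` object would then be handed to a `KafkaProducer` in an application that has the Kafka and Confluent client libraries on its classpath.

```java
import java.util.Properties;

final class AvroProducerConfigSketch {

    // Hypothetical helper: builds producer settings that swap in the
    // Schema Registry based Avro serializer for message values.
    static Properties producerProperties(String bootstrapServers, String schemaRegistryUrl) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Assumption: keys are plain strings, so the stock String serializer is used.
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Values are Avro data, serialized via Schema Registry.
        props.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        // The serializer needs to know where Schema Registry lives.
        props.put("schema.registry.url", schemaRegistryUrl);
        return props;
    }

    public static void main(String[] args) {
        // Assumed local addresses; adjust for your environment.
        Properties props = producerProperties("localhost:9092", "http://localhost:8081");
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

A consumer would mirror this with `key.deserializer` and `value.deserializer`, pointing the value side at `io.confluent.kafka.serializers.KafkaAvroDeserializer`.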


The Confluent Schema Registry based Avro serializer, by design, does not include the message schema. Instead, it includes the schema ID, in addition to a magic byte, followed by the normal binary encoding of the data itself. You can choose whether or not to embed a schema inline, allowing for cases where you may want to communicate the schema offline, with headers, or some other way. This is in contrast to other systems, such as Hadoop, that always include the schema with the message data. To learn more, see Wire format.

Typically, IndexedRecord is used for the value of the Kafka message. If used, the key of the Kafka message is often one of the supported primitive types. When sending a message to a topic t, the Avro schemas for the key and the value are automatically registered in Schema Registry under the subjects t-key and t-value, respectively, if the compatibility test passes. The only exception is that the null type is never registered in Schema Registry.

In the following example, a message is sent to Kafka with a key of type string and a value of type Avro record. A SerializationException may occur during the send call if the data is not well formed.

The examples below use the default hostname and port for the Kafka bootstrap server localhost and Schema Registry localhost The avro-maven-plugin generated code adds Java-specific properties such as "avro. You can override this by setting avro.
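The subject naming rule described above (topic t maps to subjects t-key and t-value) can be sketched in a few lines. The class and method names here are hypothetical and are not part of the Confluent client library; the sketch only mirrors the naming convention stated in the text.

```java
final class SubjectNameSketch {

    // Hypothetical helper: derives the Schema Registry subject for a topic,
    // following the "<topic>-key" / "<topic>-value" convention described above.
    static String subjectFor(String topic, boolean isKey) {
        return topic + (isKey ? "-key" : "-value");
    }

    public static void main(String[] args) {
        String topic = "t";
        System.out.println(subjectFor(topic, true));
        System.out.println(subjectFor(topic, false));
    }
}
```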

The generic types for key and value should be Object.

Support for these new serialization formats is not limited to Schema Registry, but is provided throughout Confluent Platform. Additionally, Schema Registry is extensible to support adding custom schema formats as schema plugins. The serializers can automatically register schemas when serializing a Protobuf message or a JSON-serializable object. The Protobuf serializer can recursively register all imported schemas. The serializers and deserializers are available in multiple languages, including Java, .NET, and Python.



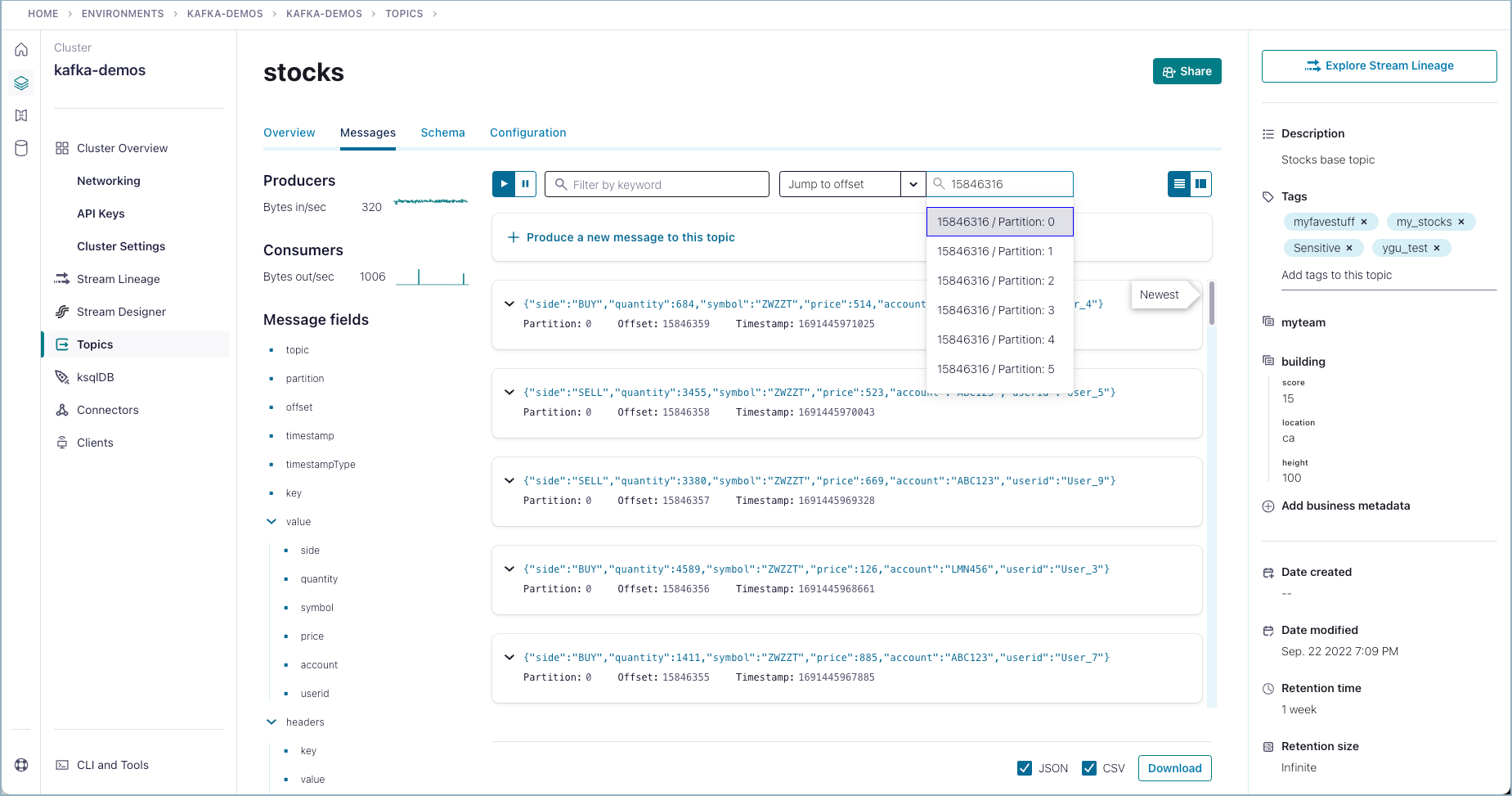

What is the simplest way to write messages to and read messages from Kafka, using (de)serializers and Schema Registry?

Next, create the following docker-compose.

Your first step is to create a topic to produce to and consume from. Use the following command to create the topic:

We are going to use Schema Registry to control our record format. The first step is creating a schema definition, which we will use when producing new records. From the same terminal you used to create the topic above, run the following command to open a terminal on the broker container:

To produce your first record into Kafka, open another terminal window and run the following command to open a second shell on the broker container:


The following schema formats are supported out-of-the-box with Confluent Platform, with serializers, deserializers, and command line tools available for each format:

The serializer will attempt to register the schema with Schema Registry by deriving a schema for the object passed to the client for serialization. The Protobuf serializer can recursively register all imported schemas.

When registering a schema, you must provide the associated references, if any. Typically, the referenced schemas are registered first; their subjects and versions can then be used when registering the schema that references them.

The subject name strategy must be configured separately in the clients. Note: The full class names for the above strategies consist of the strategy name prefixed by io.

In another shell, use curl commands to examine the schema that was registered with Schema Registry. Schema Registry supports the ability to authenticate requests using Basic Auth headers.

In most cases, you can use the serializers and formatter directly and not worry about the details of how messages are mapped to bytes. Within the version specified by the magic byte, the format will never change in any backwards-incompatible way. Very significant, compatibility-affecting changes are guaranteed at least 1 major release of warning and 2 major releases before an incompatible change is made. This is critical because the serialization format affects how keys are mapped across partitions.
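To make the byte-level mapping concrete, here is a small sketch of the framing that the magic byte refers to. It assumes the commonly documented layout: one magic byte with value 0, then a 4-byte big-endian schema ID, then the serialized payload. The class and method names are hypothetical, and the payload is treated as opaque bytes rather than real Avro-encoded data.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class WireFormatSketch {

    static final byte MAGIC_BYTE = 0x0;               // assumed format version marker
    static final int HEADER_SIZE = 1 + Integer.BYTES; // magic byte + 4-byte schema ID

    // Prepends the magic byte and schema ID to an already-encoded payload.
    static byte[] frame(int schemaId, byte[] payload) {
        return ByteBuffer.allocate(HEADER_SIZE + payload.length) // big-endian by default
                .put(MAGIC_BYTE)
                .putInt(schemaId)
                .put(payload)
                .array();
    }

    // Reads the schema ID back out, rejecting messages with an unexpected header.
    static int schemaId(byte[] message) {
        if (message.length < HEADER_SIZE) {
            throw new IllegalArgumentException("Message too short for header");
        }
        ByteBuffer buffer = ByteBuffer.wrap(message);
        if (buffer.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Unknown magic byte");
        }
        return buffer.getInt();
    }

    // Returns the encoded data that follows the header.
    static byte[] payload(byte[] message) {
        schemaId(message); // validates the header first
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }

    public static void main(String[] args) {
        byte[] framed = frame(42, new byte[] {1, 2, 3});
        System.out.println("schema id: " + schemaId(framed));
        System.out.println("payload:   " + Arrays.toString(payload(framed)));
    }
}
```

Because the schema ID sits in a fixed-size header, a consumer can look up the writer schema before decoding the rest of the message.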

To put real-time data to work, event streaming applications rely on stream processing. Kafka Connect allows developers to capture events from end systems. By leveraging the Kafka Streams API as well, developers can build pipelines that both ingest and transform streaming data for application consumption, designed with data stream format and serialization in mind.

This brief overview should get you started, and you can find more detailed information in the Formats, Serializers, and Deserializers section of the Schema Registry documentation. Schema Registry supports arbitrary schema types. In this case, it is more useful to keep an ordered sequence of related messages together that play a part in a chain of events, regardless of topic names. To limit the number of messages, you can add a value for --max-messages, such as 5 in the example:
