Databricks jobs

To learn about configuration options for jobs and how to edit your existing jobs, see Configure settings for Databricks jobs. To learn how to manage and monitor job runs, see View and manage job runs.

To create your first workflow with an Azure Databricks job, see the quickstart. When you create a job, the Tasks tab appears with the create task dialog, along with the Job details side panel containing job-level settings. In the Type drop-down menu, select the type of task to run. See Task type options.

Important: You should not create jobs with circular dependencies when using the Run Job task, or jobs that nest more than three Run Job tasks.
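
Both limits in the note above can be checked before you deploy a set of jobs. The following is a minimal sketch, not a Databricks feature: it assumes a hypothetical model in which each job name maps to the list of jobs its Run Job tasks call, and it interprets "nesting" as the number of Run Job hops on the longest call chain.

```python
from typing import Dict, List

# Hypothetical model: job name -> names of jobs called by its Run Job tasks.
RunJobGraph = Dict[str, List[str]]

MAX_RUN_JOB_NESTING = 3  # limit from the note; "hops per chain" is an assumed reading


def run_job_depth(graph: RunJobGraph, root: str) -> int:
    """Return the longest chain of Run Job hops starting at `root`.

    Raises ValueError if the Run Job calls form a cycle.
    """
    on_path = set()            # jobs on the current call path (cycle detection)
    memo: Dict[str, int] = {}  # cached depth per job

    def depth(job: str) -> int:
        if job in memo:
            return memo[job]
        if job in on_path:
            raise ValueError(f"circular Run Job dependency at {job!r}")
        on_path.add(job)
        # A job with no Run Job tasks has depth 0; each call adds one hop.
        d = max((1 + depth(child) for child in graph.get(job, [])), default=0)
        on_path.remove(job)
        memo[job] = d
        return d

    return depth(root)


def check_job(graph: RunJobGraph, root: str) -> int:
    """Validate `root` against both limits and return its nesting depth."""
    d = run_job_depth(graph, root)
    if d > MAX_RUN_JOB_NESTING:
        raise ValueError(f"{root!r} nests {d} Run Job tasks; limit is {MAX_RUN_JOB_NESTING}")
    return d
```

For example, `check_job({"parent": ["ingest", "report"], "ingest": ["clean"]}, "parent")` returns 2, while a graph in which job `a` calls `b` and `b` calls `a` raises `ValueError`.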

Thousands of Databricks customers use Databricks Workflows every day to orchestrate business-critical workloads on the Databricks Lakehouse Platform. A great way to simplify those critical workloads is through modular orchestration. This is now possible through our new task type, Run Job, which allows Workflows users to call a previously defined job as a task. Modular orchestration allows a DAG to be split along organizational boundaries, enabling different teams in an organization to work together on different parts of a workflow. When different teams own the child jobs, that ownership extends to testing and updates, which makes the parent workflows more reliable. Modular orchestration also offers reusability. When several workflows have common steps, it makes sense to define those steps in a job once and then reuse that job as a child job in different parent workflows. By using parameters, reused tasks can be made flexible enough to fit the needs of different parent workflows. Reusing jobs reduces the maintenance burden of workflows, ensures that updates and bug fixes happen in one place, and simplifies complex workflows.
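
To make the reuse pattern concrete, here is a sketch of a parent job definition built as a plain Python dict. It assumes the field names of the Databricks Jobs API 2.1 (`tasks`, `task_key`, `run_job_task`, `job_id`, `job_parameters`, `depends_on`); verify them against the API reference before use. The job IDs, task keys, and parameter names are hypothetical placeholders, and the sketch only prints the payload rather than calling the API.

```python
import json
from typing import Dict, Iterable

# Hypothetical IDs of reusable child jobs defined once and shared by many parents.
SHARED_INGEST_JOB_ID = 111
SHARED_REPORT_JOB_ID = 222


def run_job_task(task_key: str, job_id: int, job_parameters: Dict[str, str],
                 depends_on: Iterable[str] = ()) -> dict:
    """Build one task entry that calls an existing job as a Run Job task."""
    task = {
        "task_key": task_key,
        "run_job_task": {
            "job_id": job_id,
            # Parameters let each parent adapt the shared child job.
            "job_parameters": dict(job_parameters),
        },
    }
    deps = [{"task_key": key} for key in depends_on]
    if deps:
        task["depends_on"] = deps
    return task


parent_job = {
    "name": "sales-parent-workflow",  # hypothetical job name
    "tasks": [
        run_job_task("ingest_sales", SHARED_INGEST_JOB_ID, {"source": "sales"}),
        run_job_task("report_sales", SHARED_REPORT_JOB_ID, {"region": "emea"},
                     depends_on=["ingest_sales"]),
    ],
}

print(json.dumps(parent_job, indent=2))
```

A second parent workflow could call the same `SHARED_INGEST_JOB_ID` with `{"source": "marketing"}`, so a bug fix in the child job reaches both parents at once.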

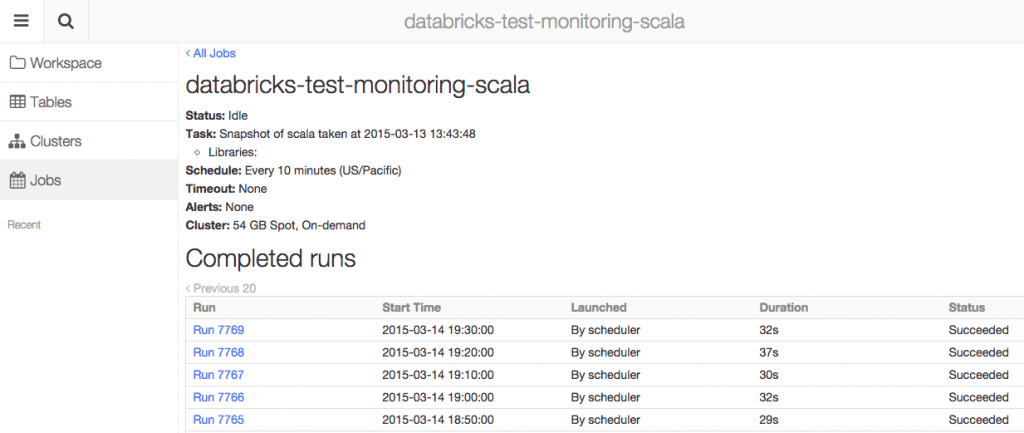

Databricks Workflows orchestrates data processing, machine learning, and analytics pipelines on the Databricks Data Intelligence Platform. Workflows has fully managed orchestration services integrated with the Databricks platform, including Databricks Jobs to run non-interactive code in your Databricks workspace and Delta Live Tables to build reliable and maintainable ETL pipelines. To learn more about the benefits of orchestrating your workflows with the Databricks platform, see Databricks Workflows.

For example, a job can orchestrate a workflow that runs two Delta Live Tables pipelines. The first ingests raw clickstream data from cloud storage, cleans and prepares the data, sessionizes it, and persists the final sessionized data set to Delta Lake. The second ingests order data from cloud storage, cleans and transforms the data for processing, and persists the final data set to Delta Lake.

A Databricks job is a way to run your data processing and analysis applications in a Databricks workspace. Your job can consist of a single task or can be a large, multi-task workflow with complex dependencies. Databricks manages the task orchestration, cluster management, monitoring, and error reporting for all of your jobs. You can run your jobs immediately, periodically through an easy-to-use scheduling system, whenever new files arrive in an external location, or continuously to ensure that an instance of the job is always running.
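
In a multi-task job, each task declares the tasks it depends on, and the orchestrator runs a task only after its dependencies finish. The sketch below imitates that dependency resolution locally with Python's standard `graphlib` module; it is an illustration of the idea, not how Databricks schedules tasks internally. The two ingestion tasks mirror the example pipelines above, and `build_report` is a hypothetical downstream task.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: task key -> set of task keys it depends on.
tasks = {
    "ingest_clickstream": set(),  # DLT pipeline: raw clickstream -> sessionized data set
    "ingest_orders": set(),       # DLT pipeline: raw orders -> cleaned data set
    "build_report": {"ingest_clickstream", "ingest_orders"},  # hypothetical consumer
}

sorter = TopologicalSorter(tasks)
sorter.prepare()  # also raises graphlib.CycleError if the graph has a cycle

# Group tasks into "waves": every task in a wave has all its dependencies done,
# so tasks within the same wave could run in parallel.
waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    waves.append(ready)
    sorter.done(*ready)

for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Here the two ingestion tasks share the first wave because neither depends on the other, and `build_report` waits for both.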

You can pass parameters for your task. These strings are passed as arguments and can be read as positional arguments or parsed using the argparse module in Python. You can override or add additional parameters when you manually run a task using the Run a job with different parameters option. See Re-run failed and skipped tasks. To learn more about triggered and continuous pipelines, see Continuous vs.

Configure the cluster where the task runs. You can also configure a cluster for each task when you create or edit a task. For a JAR task, one of the dependent libraries must contain the main class. See Configure dependent libraries.

If your job runs SQL queries using the SQL task, the identity used to run the queries is determined by the sharing settings of each query, even if the job runs as a service principal. For more information, see Roles for managing service principals and Jobs access control.

To trigger a job run when new files arrive in a Unity Catalog external location or volume, use a file arrival trigger. Total notebook cell output (the combined output of all notebook cells) is subject to a 20MB size limit. You can quickly create a new job by cloning an existing job.
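
Because task parameters arrive as plain command-line strings, a Python script task can parse them with `argparse`. The following is a minimal sketch; the parameter names (`input_path`, `--run-date`, `--dry-run`) and the example path are hypothetical and would be whatever you configure on the task.

```python
import argparse
from typing import List, Optional


def parse_task_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse task parameters; with argv=None, argparse reads sys.argv[1:]."""
    parser = argparse.ArgumentParser(description="Hypothetical Python script task")
    parser.add_argument("input_path")                  # first positional parameter
    parser.add_argument("--run-date", default=None)    # optional named parameter
    parser.add_argument("--dry-run", action="store_true")
    return parser.parse_args(argv)


def main(argv: Optional[List[str]] = None) -> argparse.Namespace:
    args = parse_task_args(argv)
    print(f"reading {args.input_path} for {args.run_date} (dry_run={args.dry_run})")
    return args
```

If the task is configured with the parameters `["/Volumes/main/raw/events", "--run-date", "2024-01-31"]`, calling `main()` at the bottom of the script yields `input_path="/Volumes/main/raw/events"`, `run_date="2024-01-31"`, and `dry_run=False`. Every value is a string, so convert dates or numbers yourself.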

When you run a Databricks job, the tasks configured as part of the job run on Databricks compute, either a cluster or a SQL warehouse depending on the task type.

See Use a notebook from a remote Git repository. Run Job: In the Job drop-down menu, select a job to be run by the task, then enter the key and value of each job parameter to pass to the job. For a JAR task, parameter strings are passed as arguments to the main method of the main class. To see an example of reading arguments in a Python script packaged in a Python wheel, see Use a Python wheel in a Databricks job.

By default, jobs run as the identity of the job owner. To prevent runs of a job from being skipped because of concurrency limits, you can enable queueing for the job.

To access information about the current task, such as the task name, or to pass context about the current run between job tasks, such as the start time of the job or the identifier of the current job run, use dynamic value references.

Tip: You can perform a test run of a job with a notebook task by clicking Run Now. To clone a task, select it in the Tasks tab.
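
Dynamic value references are written as placeholders such as `{{job.run_id}}` or `{{task.name}}` in task parameters, and Databricks replaces them with run-specific values before the task starts. Check the dynamic value references documentation for the supported names. The sketch below only imitates that substitution locally so you can see what the task receives; the regular expression and the `fake_context` values are assumptions made for illustration, not Databricks' implementation.

```python
import re
from typing import Mapping

# Matches placeholders such as {{job.run_id}}; this syntax check is an assumption.
_REF = re.compile(r"\{\{\s*([a-z_][a-z0-9_.]*)\s*\}\}")


def resolve_refs(template: str, context: Mapping[str, str]) -> str:
    """Replace each {{name}} placeholder with its value from `context`."""
    def substitute(match: "re.Match[str]") -> str:
        key = match.group(1)
        if key not in context:
            raise KeyError(f"unknown reference {key!r}")
        return context[key]

    return _REF.sub(substitute, template)


# Made-up values standing in for what a real run would supply.
fake_context = {
    "job.id": "123",
    "job.run_id": "456789",
    "task.name": "ingest_orders",
}

params = ["--run-id", "{{job.run_id}}", "--task", "{{task.name}}"]
resolved = [resolve_refs(p, fake_context) for p in params]
print(resolved)  # ['--run-id', '456789', '--task', 'ingest_orders']
```

Combined with an argument parser in the task script, this lets a downstream task log or tag its output with the identifier of the run that produced it.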