Keras LSTM

Note: this is an older post. See this tutorial for an up-to-date version of the code used here.

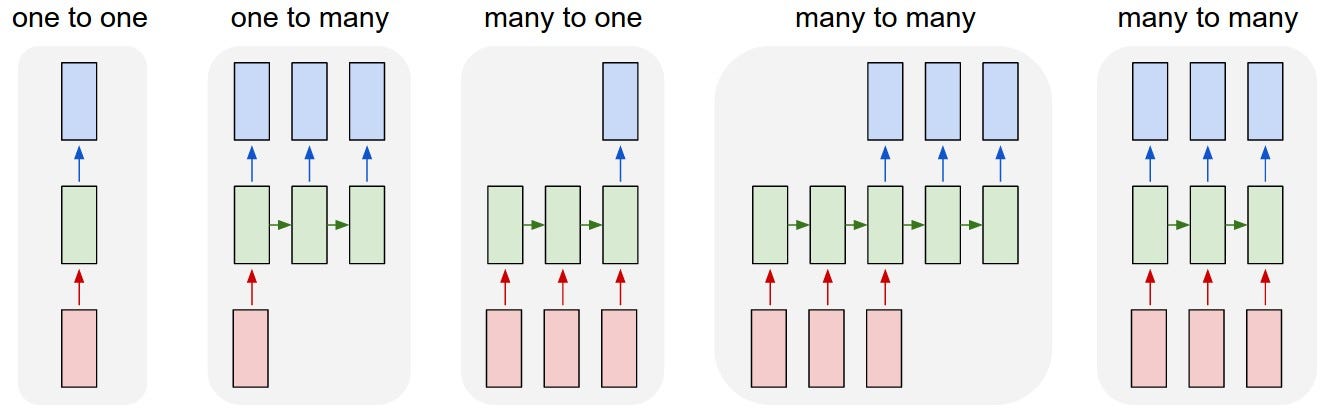

Ayush Thakur

There are principally four modes in which to run a recurrent neural network (RNN). One-to-one is straightforward enough, but let's look at the others. LSTMs can be used for a multitude of deep learning tasks using different modes. We will go through each of these modes along with its use case and a code snippet in Keras.

I am using a Keras LSTM to predict future target values (a regression problem, not classification). I created lags for the 7 columns (the target and the other 6 features), making 14 lags for each, with a lag interval of 1. I then used the Column Aggregator node to create a list containing the 98 values (14 lags x 7 features). I am not shuffling the data before each epoch because I would like the LSTM to find dependencies between the sequences. I am still trying to tune the network, perhaps with a different optimizer and different activation functions, and I am considering a different number of units for the LSTM layer.

Right now I am using only one of the many datasets that are available for the same experiment, which was conducted in different locations. Basically, I have other datasets with rows and 7 columns (the target column and 6 features). I still cannot figure out how to implement it, and how that would affect the input shape of the Keras Input Layer. Do I just append all the datasets to create one big dataset and work on that? Or is it enough to set the batch size of the Keras Network Learner to the number of rows provided by each dataset?

Do I understand your problem correctly: you want to predict the next value of the target column based on the last 14 values of the target column and the input features? If yes, you might want to change the input shape to make use of the sequential factor. Does this make sense?
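The suggestion to change the input shape can be sketched in plain Python. The helper `to_sequence` and its `feature_major` flag are hypothetical names introduced here for illustration. The ordering of the 98 aggregated values is not stated in the question, so both plausible layouts are handled and labeled as assumptions. The idea is that the LSTM receives 14 time steps of 7 features each, rather than one flat vector of 98 values.

```python
def to_sequence(flat_row, n_steps=14, n_features=7, feature_major=True):
    """Turn one flat row of lagged values into n_steps rows of n_features values."""
    expected = n_steps * n_features
    if len(flat_row) != expected:
        raise ValueError("expected %d values, got %d" % (expected, len(flat_row)))
    if feature_major:
        # Assumed layout: [col1_lag1..col1_lag14, col2_lag1..col2_lag14, ...]
        return [[flat_row[f * n_steps + t] for f in range(n_features)]
                for t in range(n_steps)]
    # Assumed layout: [step1_col1..step1_col7, step2_col1..step2_col7, ...]
    return [flat_row[t * n_features:(t + 1) * n_features] for t in range(n_steps)]


flat = list(range(98))            # stand-in for one aggregated row of 98 lag values
sequence = to_sequence(flat)
shape = (len(sequence), len(sequence[0]))
print(shape)                      # (14, 7)
```

With this layout the input shape per sample would be (14, 7) instead of (98,). Whether the lags need to be reversed so that the oldest value comes first depends on how they were generated, which the question does not specify.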

Here's how to adapt the training model to use a GRU layer:
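The original listing is missing from this page, so what follows is only a sketch of what a GRU-based encoder-decoder training model might look like in Keras. The token counts and `latent_dim` are placeholder values chosen for illustration, not values from the source. A GRU has a single state tensor, so only one state is passed from the encoder to the decoder.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Placeholder sizes, assumed for illustration only.
num_encoder_tokens = 71
num_decoder_tokens = 93
latent_dim = 256

# Encoder: keep only the final GRU state and discard the per-step outputs.
encoder_inputs = keras.Input(shape=(None, num_encoder_tokens))
encoder_outputs, state_h = layers.GRU(latent_dim, return_state=True)(encoder_inputs)

# Decoder: start from the encoder state and return the full output sequence.
decoder_inputs = keras.Input(shape=(None, num_decoder_tokens))
decoder_gru = layers.GRU(latent_dim, return_sequences=True)
decoder_outputs = decoder_gru(decoder_inputs, initial_state=state_h)
decoder_outputs = layers.Dense(num_decoder_tokens, activation="softmax")(decoder_outputs)

model = keras.Model([encoder_inputs, decoder_inputs], decoder_outputs)
```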

It is recommended to run this script on GPU, as recurrent networks are quite computationally intensive. The model is a Sequential stack built around an LSTM layer.

Corpus length:
Total chars: 56
Number of sequences:

Generating text after epoch:
Diversity: 0.
Generating with seed: " fixing, disposing, and shaping, reaches"
Generated: the strought and the preatice the the the preserses of the truth of the will the the will the crustic present and the will the such a struent and the the cause the the conselution of the such a stronged the strenting the the the comman the conselution of the such a preserst the to the presersed the crustic presents and a made the such a prearity the the presertance the such the deprestion the wil
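The "Diversity" value in the log above is presumably a sampling temperature applied to the model's predicted character probabilities. That is an assumption, since the sampling code did not survive on this page. A minimal pure-Python sketch of temperature sampling, with the hypothetical helper name `sample_index`, might look like this:

```python
import math
import random


def sample_index(probs, diversity=1.0, rng=random):
    """Reweight a probability distribution by `diversity` and draw one index."""
    # Divide log-probabilities by the temperature; the epsilon avoids log(0).
    logits = [math.log(p + 1e-12) / diversity for p in probs]
    peak = max(logits)
    # Subtract the maximum before exponentiating for numerical stability.
    weights = [math.exp(value - peak) for value in logits]
    total = sum(weights)
    rescaled = [w / total for w in weights]
    return rng.choices(range(len(probs)), weights=rescaled, k=1)[0]


probs = [0.1, 0.7, 0.2]
greedy_like = sample_index(probs, diversity=0.01, rng=random.Random(0))
```

A low diversity sharpens the distribution toward the most likely character, which is consistent with the repetitive sample shown above. Higher values flatten it and produce more varied but less coherent text.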

Confusing wording, right? Using Keras and TensorFlow makes building neural networks much easier. The best reason to build a neural network from scratch is to understand how neural networks work. In practical situations, using a library like TensorFlow is the best approach. The first thing we need to do is import the right modules. The LSTM layer is what makes this an LSTM neural network, because of the gates we talked about earlier. If we add different types of layers and cells, we can still call our neural network an LSTM, but it would be more accurate to give it a mixed name.
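As a rough illustration of the imports and of a network whose defining layer is an LSTM, here is a minimal sketch. The input dimensions, the number of units, and the regression head are assumptions made for the example, not details taken from the original article.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Assumed toy dimensions: 10 time steps with 8 features each.
timesteps, features = 10, 8

model = keras.Sequential([
    keras.Input(shape=(timesteps, features)),
    layers.LSTM(32),      # the gated recurrent layer that gives the network its name
    layers.Dense(1),      # single regression output
])
model.compile(optimizer="adam", loss="mse")
```

Adding other recurrent or convolutional layers to this stack would still work, but the result is better described by a mixed name, as noted above.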

Sequence-to-sequence learning can be used for machine translation or for free-form question answering (generating a natural language answer given a natural language question). In general, it is applicable any time you need to generate text. Another option would be a word-level model, which tends to be more common for machine translation. In some niche cases you may not be able to use teacher forcing, because you don't have access to the full target sequences. This concludes our ten-minute introduction to sequence-to-sequence models in Keras.

One-to-many sequence problems are sequence problems where the input data has one time-step and the output contains a vector of multiple values or multiple time-steps. Thus, we have a single input and a sequence of outputs. In this toy experiment, we have created a dataset shown in the image below. The model predicted the value: [[[

How is video classification treated as an example of a many-to-many RNN?

Is it something I can achieve with the batch size option in the Keras Network Learner node? Do I simply append them to the input table of the learner? I hope I was clear enough in explaining my problem.
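The RNN modes discussed here mostly differ in the shapes going in and out of the network. The following is a hedged sketch of three of them in Keras. All sizes are arbitrary, and the use of `RepeatVector` for the one-to-many case is one common pattern rather than necessarily the approach taken in the original article.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Arbitrary sizes, assumed for illustration.
n_features, timesteps, out_steps, n_classes, units = 4, 5, 3, 2, 16

# Many-to-one: read a whole sequence, emit a single prediction.
many_to_one = keras.Sequential([
    keras.Input(shape=(timesteps, n_features)),
    layers.LSTM(units),
    layers.Dense(n_classes, activation="softmax"),
])

# Many-to-many: emit one prediction per input time step.
many_to_many = keras.Sequential([
    keras.Input(shape=(timesteps, n_features)),
    layers.LSTM(units, return_sequences=True),
    layers.TimeDistributed(layers.Dense(1)),
])

# One-to-many: a single input step is expanded into a sequence of outputs.
one_to_many = keras.Sequential([
    keras.Input(shape=(1, n_features)),
    layers.LSTM(units),
    layers.RepeatVector(out_steps),
    layers.LSTM(units, return_sequences=True),
    layers.TimeDistributed(layers.Dense(1)),
])
```

The key switch is `return_sequences`: left at its default of `False`, the LSTM returns only its last output, and set to `True` it returns one output per time step.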

What if your inputs are integer sequences?

Because the training process and the inference process (decoding sentences) are quite different, we use different models for each, although they all leverage the same inner layers. We get some nice results, which is unsurprising since we are decoding samples taken from the training set.

A typical example is image captioning, where the description of an image is generated. Here, encoder-decoder is just a fancy name for a neural architecture with two LSTM layers. When predicting with test data, the input is a sequence of three time steps: [, , ]. That works in some cases.

My understanding is that the frames of a video form the input sequence and that a single classification can occur after the whole sequence ends.

I reduced the size of the dataset but the problem remains.

Best, Kathrin. PS: If you want to predict multiple time steps, you can use a recursive loop that feeds the predicted values back in to predict the next one.
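The recursive loop mentioned in the PS can be sketched independently of any particular model. In the snippet below, `recursive_forecast` and `predict_next` are hypothetical names. The stand-in predictor simply extrapolates the last difference, whereas in practice it would wrap a call to the trained network.

```python
def recursive_forecast(predict_next, history, n_steps, window=3):
    """Predict n_steps ahead by feeding each prediction back in as input."""
    window_values = list(history[-window:])
    forecasts = []
    for _ in range(n_steps):
        next_value = predict_next(window_values)
        forecasts.append(next_value)
        # Slide the window: drop the oldest value, append the new prediction.
        window_values = window_values[1:] + [next_value]
    return forecasts


def linear_stub(values):
    """Stand-in for a trained model: continue the most recent trend."""
    return values[-1] + (values[-1] - values[-2])


forecasts = recursive_forecast(linear_stub, [10, 20, 30], n_steps=3)
print(forecasts)  # [40, 50, 60]
```

Because every step consumes earlier predictions, errors can accumulate over the horizon, so this approach tends to be more reliable for short forecasts.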
