Pandas to Spark

As a data scientist or software engineer, you may often find yourself working with large datasets that require distributed computing. Apache Spark is a powerful distributed computing framework that can handle big data processing tasks efficiently.

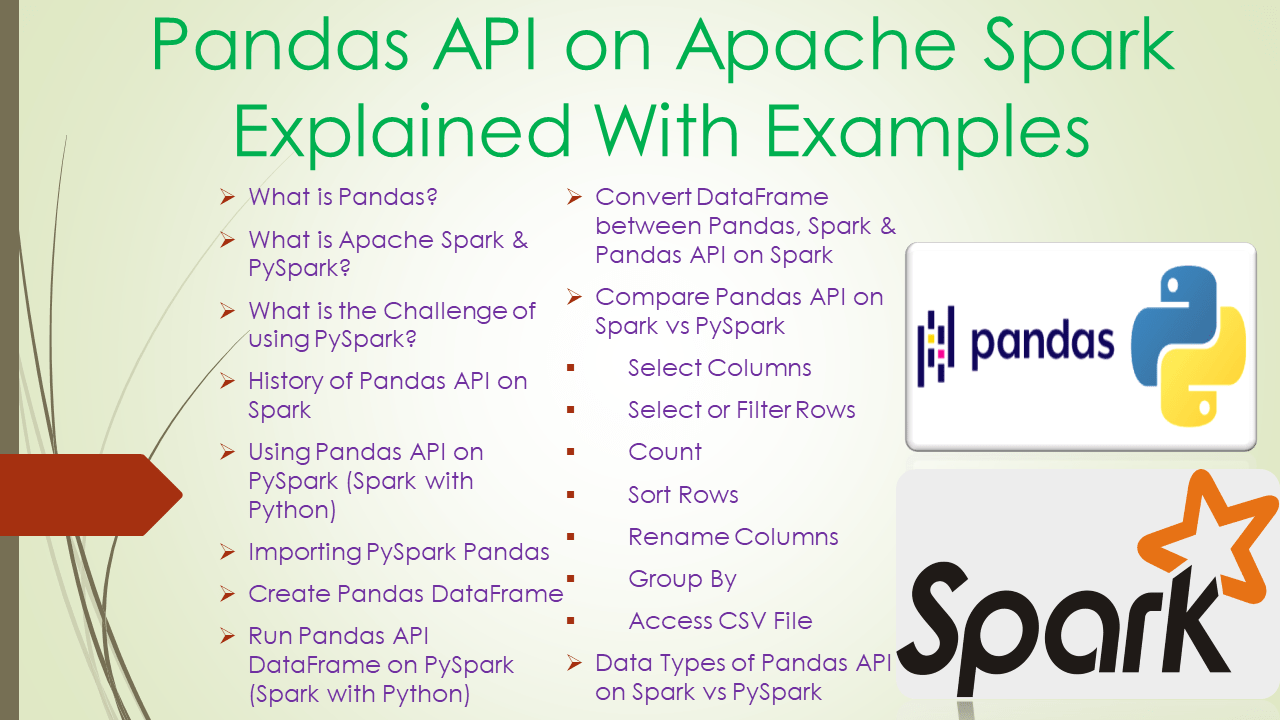

This is a short introduction to the pandas API on Spark, geared mainly toward new users. It shows some key differences between pandas and the pandas API on Spark. You can create a pandas-on-Spark Series by passing a list of values, letting the pandas API on Spark create a default integer index. You can create a pandas-on-Spark DataFrame by passing a dict of objects that can be converted to series-like structures, with each column having a specific dtype. Types that are common to both Spark and pandas are currently supported.
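The Series and DataFrame constructions described above might look like the following minimal sketch. It assumes PySpark 3.2 or later, where the pandas API on Spark is available as `pyspark.pandas`. The helper names `make_series` and `make_frame` and all values are illustrative, not taken from the original notebook.

```python
import numpy as np
import pandas as pd

# Illustrative input data (assumed for this sketch).
VALUES = [1, 3, 5, np.nan, 6, 8]
COLUMNS = {
    "a": [1, 2, 3],        # integer column
    "b": [1.0, 2.0, 3.0],  # floating-point column
    "c": ["x", "y", "z"],  # string column
}


def make_series():
    """Build a pandas-on-Spark Series with a default integer index."""
    # Imported lazily so the pandas-only part runs without Spark installed.
    import pyspark.pandas as ps
    return ps.Series(VALUES)


def make_frame():
    """Build a pandas-on-Spark DataFrame from a dict of list-like columns."""
    import pyspark.pandas as ps
    return ps.DataFrame(COLUMNS)


# The plain pandas equivalent, for comparison; the constructor call is the same.
pandas_frame = pd.DataFrame(COLUMNS)
```

With a working Spark installation, `make_frame().dtypes` can be compared against `pandas_frame.dtypes` to see how the column types line up.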

Pandas and PySpark are two popular data processing tools in Python. While pandas is well suited to small and medium-sized datasets on a single machine, PySpark is designed for distributed processing of large datasets across multiple machines. Converting a pandas DataFrame to a PySpark DataFrame can be necessary when you need to scale up your data processing to handle larger datasets.

The conversion takes two arguments: data is the list of values from which the DataFrame is created, and schema is either the structure of the dataset or a list of column names. The spark parameter refers to the SparkSession object in PySpark.

A typical example first creates a pandas DataFrame, then creates a SparkSession object, converts the pandas DataFrame to a PySpark DataFrame through that session, and finally uses the show method to display the contents of the PySpark DataFrame on the console. Before running such code, make sure that the pandas and PySpark libraries are installed on your system.

An alternative route goes through Parquet. The pandas DataFrame is first converted to a PyArrow Table, which is written to disk in Parquet format; this creates a file called data. Spark then reads that file into a PySpark DataFrame, and the show method again displays its contents.
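Both conversion routes can be sketched as follows. This is a minimal illustration, not the original article's code: the column names, the application name, the helper function names, and the file name `data.parquet` are all assumptions. The calls used (`SparkSession.builder.getOrCreate`, `spark.createDataFrame`, `pyarrow.Table.from_pandas`, `pyarrow.parquet.write_table`, `spark.read.parquet`) are standard PySpark and PyArrow APIs.

```python
import pandas as pd


def make_pandas_df():
    """Create a small pandas DataFrame (illustrative data)."""
    return pd.DataFrame({"name": ["Alice", "Bob", "Carol"], "age": [25, 30, 35]})


def to_spark(pdf, app_name="pandas-to-spark"):
    """Convert a pandas DataFrame directly with createDataFrame."""
    # Imported lazily so the pandas-only part runs without Spark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName(app_name).getOrCreate()
    return spark.createDataFrame(pdf)


def to_spark_via_parquet(pdf, path="data.parquet"):
    """Convert by writing a Parquet file and reading it back with Spark.

    The default path is an assumed file name for this sketch.
    """
    import pyarrow as pa
    import pyarrow.parquet as pq
    from pyspark.sql import SparkSession

    table = pa.Table.from_pandas(pdf)  # pandas DataFrame -> PyArrow Table
    pq.write_table(table, path)        # PyArrow Table -> Parquet file on disk
    spark = SparkSession.builder.getOrCreate()
    return spark.read.parquet(path)    # Parquet file -> PySpark DataFrame


pdf = make_pandas_df()
```

With Spark available, `to_spark(pdf).show()` prints the converted rows to the console. The Parquet route is mainly useful when the data is already on disk or is too large to hand over through the driver in one call.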

To use pandas, you first have to import it using import pandas as pd. When an error occurs, Spark automatically falls back to the non-Arrow implementation; this behavior can be controlled by a Spark configuration setting.
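As a hedged illustration of the Arrow settings mentioned above: the source does not spell out the configuration names, so the two keys below are assumptions based on the names used in Spark 3.x (`spark.sql.execution.arrow.pyspark.enabled` and `spark.sql.execution.arrow.pyspark.fallback.enabled`). The helper `build_session` and the application name are hypothetical.

```python
# Assumed Spark 3.x configuration keys; check them against your Spark version.
ARROW_SETTINGS = {
    # Use Arrow when converting between pandas and PySpark DataFrames.
    "spark.sql.execution.arrow.pyspark.enabled": "true",
    # Fall back to the non-Arrow path if the Arrow conversion fails.
    "spark.sql.execution.arrow.pyspark.fallback.enabled": "true",
}


def build_session(settings=None, app_name="arrow-demo"):
    """Create (or reuse) a SparkSession with the given settings applied."""
    # Imported lazily so the settings can be inspected without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in (settings or ARROW_SETTINGS).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

Setting the fallback key to `"false"` would make a failed Arrow conversion raise an error instead of silently switching to the slower path, which can be useful when diagnosing performance.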

Apache Arrow is an in-memory columnar data format that Spark uses to transfer data efficiently between JVM and Python processes. This is beneficial to Python developers who work with pandas and NumPy data. However, its usage requires some minor configuration or code changes to ensure compatibility and gain the most benefit. For information on the version of PyArrow available in each Databricks Runtime version, see the Databricks Runtime release notes versions and compatibility. StructType is represented as a pandas.DataFrame instead of pandas. BinaryType is supported only for PyArrow versions 0.

Sometimes we receive data as CSV, XLSX, or similar files. For conversion, we pass the pandas DataFrame into the createDataFrame method. Example 1: Create a DataFrame and then convert it using spark. Example 2: Create a DataFrame and then convert it using spark. The dataset used here is heart. We can also convert a PySpark DataFrame to a pandas DataFrame. For this, we will use DataFrame.
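The file-based workflow and the reverse conversion can be sketched as below. The inline CSV text stands in for a file on disk, and its column names and values are made up for illustration; they are not the dataset the original article used. The reverse direction uses `DataFrame.toPandas()`, a standard PySpark method; the helper names are hypothetical.

```python
import io

import pandas as pd

# Illustrative CSV content standing in for a file on disk (assumed columns).
CSV_TEXT = """age,sex,chol
63,1,233
37,1,250
41,0,204
"""


def load_csv(text=CSV_TEXT):
    """Load CSV data into a pandas DataFrame."""
    return pd.read_csv(io.StringIO(text))


def round_trip(pdf, app_name="round-trip"):
    """Convert pandas -> PySpark with createDataFrame, then back with toPandas."""
    # Imported lazily so the pandas-only part runs without Spark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName(app_name).getOrCreate()
    sdf = spark.createDataFrame(pdf)  # pandas DataFrame -> PySpark DataFrame
    return sdf.toPandas()             # PySpark DataFrame -> pandas DataFrame


pdf = load_csv()
```

Note that `toPandas()` collects every row to the driver, so it is only suitable when the result fits in the memory of a single machine.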

A PySpark DataFrame is similar to a pandas DataFrame but is designed to handle big data processing tasks efficiently. Using the Arrow optimizations produces the same results as when Arrow is not enabled.

On large datasets, PySpark operations can run faster than their pandas equivalents because Spark distributes the work and executes it in parallel across multiple cores and machines.

PySpark provides a way to interact with Spark through Python APIs. Parallelism: Spark can perform operations on data in parallel, which can significantly improve the performance of data processing tasks.
