PySpark drop duplicates

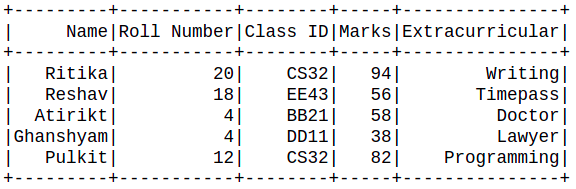

In this article, you will learn how to use the distinct() and dropDuplicates() functions, with PySpark examples. We use the sample DataFrame to demonstrate how to get distinct rows across multiple columns. In the table above, the record with employee name James has duplicate rows: 2 rows have duplicate values on all columns, and 4 rows have duplicate values on the department and salary columns. The DataFrame has 10 rows in total, and exactly one of them is a full duplicate, so performing distinct() on it should return 9 rows. Alternatively, you can run dropDuplicates(), which returns a new DataFrame after removing duplicate rows.

In this article, we drop duplicate rows from a DataFrame by using the distinct() and dropDuplicates() functions in PySpark. We can also use the select() function together with distinct() to get the distinct values of particular columns. Syntax: dataframe.

What is the difference between the PySpark distinct() and dropDuplicates() methods? Both drop duplicate rows and return a new DataFrame with unique rows. The main difference is that distinct() always compares all columns of the DataFrame, whereas dropDuplicates() can be restricted to selected columns. distinct() takes no arguments and returns a new DataFrame containing the distinct rows in this DataFrame.

Columns can also be removed from a DataFrame with the drop() function, and three different ways of doing so are explained below. To use a second signature you need to import pyspark. You can use whichever of these suits your need. One form takes an array of column-name strings as the argument to drop() and removes every column in the array from the DataFrame. The multi-column forms remove more than one column at a time, and they yield the same output.

Duplicate rows can be removed from a Spark SQL DataFrame using the distinct() and dropDuplicates() functions. distinct() removes rows that have the same values on all columns, whereas dropDuplicates() can remove rows that have the same values on multiple selected columns. In the dataset above, there are 10 rows in total and one row with all values duplicated, so performing distinct() on this DataFrame should return 9 rows. Alternatively, you can run dropDuplicates(), which returns a new DataFrame with duplicate rows removed. The complete example is available at GitHub for reference. In this Spark article, you have learned how to remove DataFrame rows that are exact duplicates using distinct() and how to remove duplicate rows based on multiple columns using dropDuplicates(), with a Scala example.

PySpark does not support specifying multiple columns with distinct() in order to remove duplicates. Use dropDuplicates() for that instead: it returns a new DataFrame with duplicate rows removed and, when columns are passed as arguments, it considers only the selected columns. The distinct() function on a DataFrame returns a new DataFrame after removing the duplicate records.

As a Data Engineer, I collect, extract and transform raw data in order to provide clean, reliable and usable data. In this tutorial, we want to drop duplicates from a PySpark DataFrame.
