SageMaker PyTorch

With multi-model endpoints (MMEs), you can host multiple models on a single serving container and serve them all behind a single endpoint. The SageMaker platform automatically manages the loading and unloading of models and scales resources based on traffic patterns, reducing the operational burden of managing a large number of models. This feature is particularly beneficial for deep learning and generative AI models that require accelerated compute.
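To make the load-and-unload behavior concrete, here is a minimal sketch of a least-recently-used model cache. It is purely illustrative: `ModelCache`, `loader`, and `max_models` are hypothetical names, and the eviction policy shown is an assumption, since this text does not describe how SageMaker decides which model to unload.

```python
from collections import OrderedDict
from typing import Any, Callable, List


class ModelCache:
    """Toy LRU cache that keeps at most `max_models` models in memory."""

    def __init__(self, loader: Callable[[str], Any], max_models: int) -> None:
        if max_models < 1:
            raise ValueError("max_models must be at least 1")
        self._loader = loader  # hypothetical: fetches and deserializes a model
        self._max_models = max_models
        self._models: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, name: str) -> Any:
        if name in self._models:
            # Cache hit: mark the model as most recently used.
            self._models.move_to_end(name)
            return self._models[name]
        # Cache miss: evict the least recently used model if the cache is full.
        if len(self._models) >= self._max_models:
            self._models.popitem(last=False)
        model = self._loader(name)
        self._models[name] = model
        return model

    def loaded(self) -> List[str]:
        """Names of the models currently in memory, least recently used first."""
        return list(self._models)
```

In this sketch, a request for a model that is already in memory skips the loader entirely, while a request for an unloaded model pays the loading cost first.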

Hugging Face Transformers provides Trainer and pretrained model classes for PyTorch that reduce the effort of configuring natural language processing (NLP) models. A dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class. You don't need to change your code when you use the Transformers Trainer class.
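As a sketch of why padding matters here: if every batch is padded to the same length, the input shape stays constant from step to step. The helper below is a plain-Python illustration, not the Transformers implementation; `pad_to_max_length`, `max_length`, and `pad_id` are hypothetical names. With a Hugging Face tokenizer, passing `padding="max_length"` together with `truncation=True` and a `max_length` value has a comparable effect.

```python
from typing import List


def pad_to_max_length(
    batch: List[List[int]], max_length: int, pad_id: int = 0
) -> List[List[int]]:
    """Truncate or right-pad every sequence to exactly `max_length` tokens."""
    padded = []
    for ids in batch:
        ids = ids[:max_length]  # truncate sequences that are too long
        padded.append(ids + [pad_id] * (max_length - len(ids)))  # pad short ones
    return padded


batch_a = pad_to_max_length([[5, 6, 7], [8]], max_length=4)
batch_b = pad_to_max_length([[1, 2, 3, 4, 5, 6]], max_length=4)

# Every row has the same length regardless of the original input lengths.
print({len(row) for row in batch_a + batch_b})  # prints {4}
```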

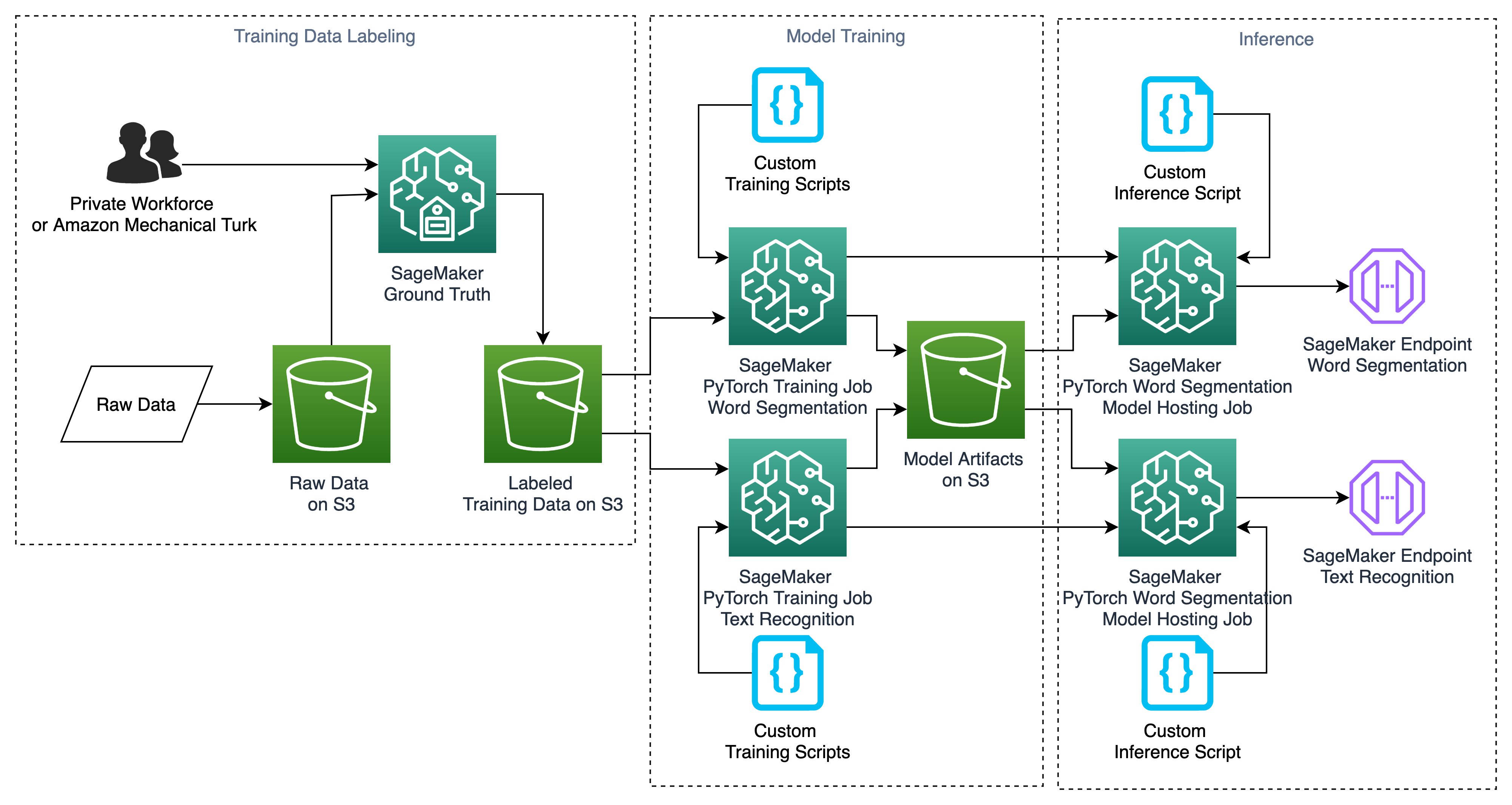

We have published the code to implement this solution architecture in our GitHub repository. It provides additional arguments such as original and mask images, allowing for quick modification and restoration of existing content.

A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas and synthetic data, and more. For example, the following images show the effect of picture-to-picture conversion. The following images show the effect of synthesizing scenery based on semantic layout. We also introduce a use case of one of the hottest GAN applications in the synthetic data generation area. We hope this gives you a tangible sense of how GANs are used in real-life scenarios. Of the following two pictures of handwritten digits, one was actually generated by a GAN model. Can you tell which one?

Deploying high-quality, trained machine learning (ML) models to perform either batch or real-time inference is a critical piece of bringing value to customers. However, the ML experimentation process can be tedious: there are many approaches, and each requires a significant amount of time to implement. Amazon SageMaker provides a unified interface to experiment with different ML models, and the PyTorch Model Zoo allows us to easily swap our models in a standardized manner. Setting up these ML models as a SageMaker endpoint or SageMaker Batch Transform job for online or offline inference is easy with the steps outlined in this blog post. We will use a Faster R-CNN object detection model to predict bounding boxes for pre-defined object classes. Finally, we will deploy the ML model, perform inference on it using SageMaker Batch Transform, inspect the model output, and learn how to interpret the results. This solution can be applied to any other pre-trained model in the PyTorch Model Zoo.
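When interpreting the results, it helps to know that torchvision detection models such as Faster R-CNN return, for each image, a dictionary with `boxes` (as `[x1, y1, x2, y2]`), `labels`, and `scores`. The following sketch filters such a prediction by confidence, using plain Python lists in place of tensors. The sample values, the `filter_detections` helper, and the 0.5 threshold are made up for illustration, and the exact shape of the output written by a Batch Transform job depends on your inference script.

```python
from typing import Dict, List


def filter_detections(prediction: Dict[str, list], threshold: float = 0.5) -> List[dict]:
    """Keep only detections whose score is at least `threshold`."""
    kept = []
    for box, label, score in zip(
        prediction["boxes"], prediction["labels"], prediction["scores"]
    ):
        if score >= threshold:
            kept.append({"box": box, "label": label, "score": score})
    return kept


# Hypothetical prediction for one image (values invented for the example).
sample = {
    "boxes": [[10.0, 20.0, 110.0, 220.0], [5.0, 5.0, 50.0, 60.0]],
    "labels": [1, 18],
    "scores": [0.92, 0.31],
}

for det in filter_detections(sample):
    print(det["label"], det["score"], det["box"])
```

Raising or lowering the threshold trades missed objects against spurious boxes, so it is worth tuning on a few representative images.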

These models are used in the user experience workflow in two ways: to remove an unwanted object, and to modify or replace an object. Segment Anything Model (SAM) is used to generate a segment mask of the object of interest. LaMa is used to remove any undesired objects from an image. For more details on LaMa, visit their website and research paper. When modifying or replacing an object, the model changes the highlighted object based on the provided instructions.

Starting today, you can easily train and deploy your PyTorch deep learning models in Amazon SageMaker.

Generative AI can help artists and content creators work more efficiently to meet content demand by automating repetitive tasks, optimizing campaigns, and providing a hyper-personalized experience for the end customer. In this post, we demonstrate how to host generative AI models, such as Stable Diffusion and Segment Anything Model, on SageMaker MMEs using TorchServe and build a language-guided editing solution that can help artists and content creators develop and iterate on their artwork faster. The editing features let you erase any unwanted object from an image and modify or replace any object in an image by supplying a text instruction. The example we shared illustrates how we can use resource sharing and simplified model management with SageMaker MMEs while still utilizing TorchServe as our model serving stack.

With all of these changes, you should be able to launch distributed training with any PyTorch model without the Transformers Trainer API. SageMaker Training Compiler uses an alternate mechanism for launching a distributed training job, and you don't need to make any modification in your training script. During training, you might want to examine some intermediate results such as loss values.

Given an endpoint configuration with sufficient memory for your target models, steady state invocation latency after all models have been loaded will be similar to that of a single-model endpoint. This is the S3 location from which our MME reads models. Refer to Amazon SageMaker Pricing for details on the cost of the inference instances.
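Because every model sits behind the same endpoint, each request to an MME has to name the model it targets. With the boto3 SageMaker runtime client, this is done through the `TargetModel` parameter of `invoke_endpoint`, which takes the artifact's path relative to the S3 prefix the endpoint reads from. The sketch below only assembles the request arguments so that it runs without AWS credentials; the `build_invoke_args` helper, the endpoint name, the artifact name, and the payload are placeholders, not values from this post.

```python
import json
from typing import Any, Dict


def build_invoke_args(
    endpoint_name: str, target_model: str, payload: Dict[str, Any]
) -> Dict[str, Any]:
    """Assemble keyword arguments for the sagemaker-runtime `invoke_endpoint` call."""
    return {
        "EndpointName": endpoint_name,
        "TargetModel": target_model,  # artifact path relative to the MME's S3 prefix
        "ContentType": "application/json",
        "Body": json.dumps(payload),
    }


args = build_invoke_args(
    endpoint_name="my-mme-endpoint",  # placeholder
    target_model="sam.tar.gz",  # placeholder artifact name
    payload={"prompt": "example"},  # placeholder; the real schema depends on the handler
)

# With credentials configured, the call would look like:
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   response = runtime.invoke_endpoint(**args)
print(args["TargetModel"])
```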
