Spark read csv

In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using PySpark. To read a CSV file, call either `csv("path")` or `format("csv").load("path")` on `spark.read`.
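As a rough sketch of the three read patterns and the final write, the helpers below take an already-created `SparkSession` as `spark`. The function names and all paths are hypothetical and only serve as illustration.

```python
from typing import Sequence


def read_csv_sources(spark, single_file: str, many_files: Sequence[str], directory: str):
    """Read CSV data in several ways using an existing SparkSession."""
    # One file: csv() and format("csv").load() are two equivalent entry points.
    one = spark.read.csv(single_file)
    same = spark.read.format("csv").load(single_file)
    # Several files: pass a list of paths.
    many = spark.read.csv(list(many_files))
    # A directory: Spark reads the files it finds inside it.
    everything = spark.read.csv(directory)
    return one, same, many, everything


def write_csv(df, out_dir: str) -> None:
    """Write a DataFrame as CSV; the output is a directory of part files."""
    df.write.mode("overwrite").option("header", True).csv(out_dir)
```

With a real session, a call might look like `read_csv_sources(spark, "data/a.csv", ["data/b.csv", "data/c.csv"], "data/")`.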

DataFrames are distributed collections of data organized into named columns. Spark reads CSV files in parallel, leveraging its distributed computing capabilities, which enables efficient processing of large datasets across a cluster of machines. The same read, transform, and write workflow is also available from Scala.


`spark.read.csv` goes through the input once to determine the schema if `inferSchema` is enabled. To avoid this extra pass over the data, disable the `inferSchema` option or specify the schema explicitly using `schema`. For additional options, refer to the Data Source Option documentation for the Spark version you use.
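The trade-off can be sketched as two small helpers, assuming an existing `SparkSession` is passed in as `spark`. The function names are hypothetical.

```python
def read_with_inferred_types(spark, path: str):
    # inferSchema=True makes Spark go through the input an extra time
    # to guess each column's type.
    return (
        spark.read
        .option("header", True)
        .option("inferSchema", True)
        .csv(path)
    )


def read_without_inference(spark, path: str):
    # With inferSchema left at its default (off), no type-inference pass
    # happens and every column comes back as a string.
    return spark.read.option("header", True).csv(path)
```

For large inputs, the second form (or an explicit schema) is usually the cheaper choice.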


Spark SQL provides `spark.read.csv` to read a file or a directory of CSV files into a DataFrame, and `DataFrame.write.csv` to write one back out. The `option` function can be used to customize the behavior of reading or writing, such as controlling the header, the delimiter character, the character set, and so on. For example, when reading, the `encoding` option decodes the CSV files using the given encoding type. Other generic options can be found in Generic File Source Options.
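A minimal sketch of chaining `option` calls on both the reader and the writer follows. It assumes an existing `SparkSession` passed in as `spark`; the function names, the pipe and tab delimiters, and the UTF-8 encoding are illustrative choices, not requirements.

```python
def read_pipe_delimited(spark, path: str):
    return (
        spark.read
        .option("header", True)       # first row holds the column names
        .option("delimiter", "|")     # field separator ("sep" is an equivalent key)
        .option("encoding", "UTF-8")  # character set used to decode the files
        .csv(path)
    )


def write_tab_delimited(df, out_dir: str) -> None:
    # The same option mechanism applies when writing.
    df.write.option("header", True).option("delimiter", "\t").csv(out_dir)
```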

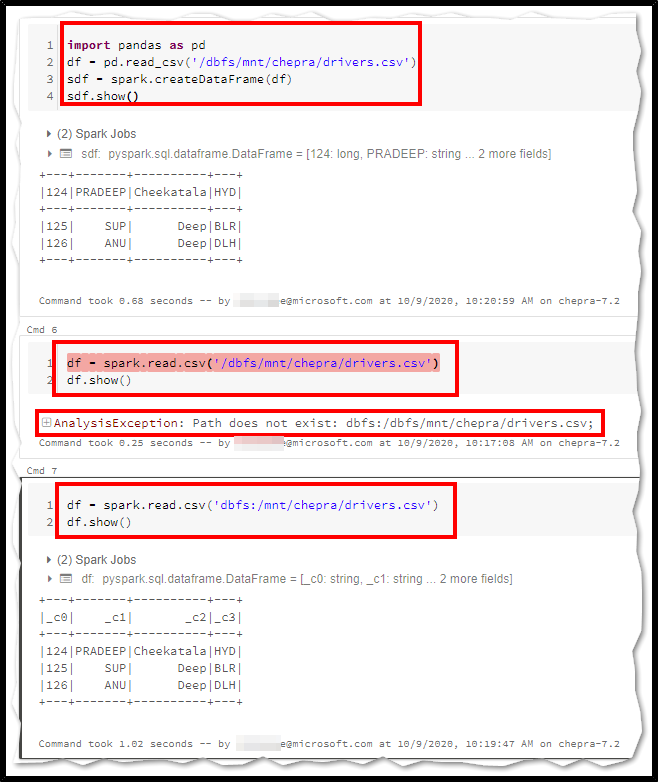

You can set up Apache Spark on your own computer, which means you can learn it with a local install at no cost. To read a CSV file, use the `spark.read.csv` method. The `header` option specifies that the first row of the CSV file contains the column names, so these are used to name the columns in the DataFrame. The `show` method prints the contents of a DataFrame to the console. By default Spark does not try to infer the schema, which is useful because it avoids an extra pass over the data.
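The header-and-show flow might look like the sketch below. The helper name `preview_csv` and the `rows` parameter are hypothetical, and `spark` is assumed to be an existing `SparkSession`.

```python
def preview_csv(spark, path: str, rows: int = 5):
    # Use the first row of the file as column names.
    df = spark.read.option("header", True).csv(path)
    # Nothing was inferred, so every column is reported as a string.
    df.printSchema()
    # Print up to `rows` rows to the console.
    df.show(rows)
    return df
```

On a local install, a session for trying this out can be obtained with `SparkSession.builder.master("local[*]").getOrCreate()` from `pyspark.sql`.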




If you know the schema of the file ahead of time and do not want to use the `inferSchema` option for column names and types, supply your own column names and types using the `schema` option. Note that `inferSchema` requires reading the data one more time to infer the schema. The `dateFormat` option applies to columns of date type.
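One way to supply a schema without extra imports is a DDL-style string, as in this sketch. The column names, the date pattern, and the helper name are all hypothetical; `spark` is assumed to be an existing `SparkSession`.

```python
# Hypothetical columns for illustration; replace them with those of your file.
SALES_SCHEMA = "order_id INT, customer STRING, order_date DATE, amount DOUBLE"


def read_with_schema(spark, path: str, schema: str = SALES_SCHEMA):
    return (
        spark.read
        .schema(schema)                      # DDL string; a StructType works too
        .option("header", True)              # the file still has a header row to skip
        .option("dateFormat", "yyyy-MM-dd")  # pattern used to parse DATE columns
        .csv(path)
    )
```

Because the types are given up front, Spark does not need the additional inference pass.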

Spark provides several read options that help you read files. `spark.read` returns a reader for loading data from sources such as CSV. In this article, we discuss the different Spark read options and their configuration, with examples.

The `header` option reads the first line of the CSV file as column names. Options are specified using the `option` or `options` method. The `quote` option sets a single character used for escaping quoted values where the separator can be part of the value. With the `unescapedQuoteHandling` option set to `BACK_TO_DELIMITER`, if no delimiter is found in the value, the parser continues accumulating characters from the input until a delimiter or line ending is found. Another option allows falling back to the backward-compatible behavior of older Spark versions. As mentioned earlier, PySpark reads all columns as strings (`StringType`) by default. You can also provide a custom path in the `badRecordsPath` option to record corrupt records to a file.
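Several options can be collected in a dictionary and passed in one call to `options`, as in this sketch. The helper names and the chosen defaults are assumptions for illustration, `spark` is an existing `SparkSession`, and `badRecordsPath` is left out because it may not be available in every Spark distribution.

```python
def csv_read_options(header: bool = True, quote: str = '"', escape: str = "\\",
                     unescaped: str = "BACK_TO_DELIMITER") -> dict:
    """Build a dictionary of CSV reader options."""
    return {
        "header": header,                     # first line holds column names
        "quote": quote,                       # character that wraps quoted values
        "escape": escape,                     # escapes quotes inside a quoted value
        "unescapedQuoteHandling": unescaped,  # how to treat stray quotes
    }


def read_with_options(spark, path: str, **overrides):
    # Later keys win, so callers can override any default.
    opts = {**csv_read_options(), **overrides}
    return spark.read.options(**opts).csv(path)
```

For example, `read_with_options(spark, "data.csv", quote="'")` keeps the other defaults and changes only the quote character.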
