Tacotron 2 github

Tacotron 2 - PyTorch implementation with faster-than-realtime inference. This implementation includes distributed and automatic mixed precision support and uses the LJSpeech dataset.
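
"Faster-than-realtime" is usually quantified with a real-time factor (RTF): synthesis time divided by the duration of the audio produced. An RTF below 1.0 means audio is generated faster than it plays back. The sketch below is a minimal illustration under stated assumptions. The `synthesize(text) -> samples` callable is a hypothetical stand-in, not this repository's API. The 22,050 Hz sample rate in the usage note is assumed because it matches LJSpeech.

```python
import time
from typing import Callable, Sequence


def real_time_factor(num_samples: int, sample_rate: int, elapsed_s: float) -> float:
    """Return synthesis time divided by the duration of the generated audio.

    A value below 1.0 means the audio was produced faster than real time.
    """
    if num_samples <= 0 or sample_rate <= 0:
        raise ValueError("num_samples and sample_rate must be positive")
    audio_duration_s = num_samples / sample_rate
    return elapsed_s / audio_duration_s


def timed_synthesis(
    synthesize: Callable[[str], Sequence[float]],  # hypothetical TTS callable
    text: str,
    sample_rate: int,
) -> float:
    """Time one call of synthesize(text) and return its real-time factor."""
    start = time.perf_counter()
    audio = synthesize(text)
    elapsed = time.perf_counter() - start
    return real_time_factor(len(audio), sample_rate, elapsed)
```

For example, `real_time_factor(44100, 22050, 0.5)` describes 2 seconds of audio produced in 0.5 seconds, which gives an RTF of 0.25.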

This repository contains sample code for Tacotron 2 and WaveGlow with multi-speaker and emotion embeddings, together with a script for data preprocessing. Checkpoints and code originate from the following sources:

The following section lists the requirements for training the Tacotron 2 and WaveGlow models. Aside from these dependencies, ensure you have the following components:

The folders tacotron2 and waveglow contain the scripts for the Tacotron 2 and WaveGlow models, respectively, and consist of:

When training or data processing starts, parameters are copied from your experiment (in our case, from waveglow). Since both scripts waveglow.
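
Multi-speaker and emotion embeddings are commonly used to condition the decoder. One vector per speaker and one per emotion is looked up and attached to every encoder time step. The text above does not say how this repository combines them, so the sketch below simply assumes concatenation. In a real model the tables would be learned embeddings (for example `torch.nn.Embedding`), not the plain lists used here for illustration.

```python
from typing import List, Sequence

Vector = List[float]


def condition_encoder_outputs(
    encoder_outputs: Sequence[Vector],  # T frames, each of size D
    speaker_table: Sequence[Vector],    # one row per speaker ID (assumed layout)
    emotion_table: Sequence[Vector],    # one row per emotion ID (assumed layout)
    speaker_id: int,
    emotion_id: int,
) -> List[Vector]:
    """Concatenate the same speaker and emotion vectors onto every encoder frame.

    The two vectors are broadcast over time, so a (T, D) input becomes
    (T, D + D_speaker + D_emotion).
    """
    speaker_vec = list(speaker_table[speaker_id])
    emotion_vec = list(emotion_table[emotion_id])
    return [list(frame) + speaker_vec + emotion_vec for frame in encoder_outputs]
```

Adding the embeddings to the encoder outputs instead of concatenating them is an equally common design. It keeps the feature size unchanged but requires the embedding size to match D.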

TensorFlow implementation of DeepMind's Tacotron. Suggested hparams are provided; feel free to toy with the parameters as needed. The previous tree shows the current state of the repository (separate training, one step at a time):

- Step 1: Preprocess your data.
- Step 2: Train your Tacotron model. This yields the logs-Tacotron folder.
- Step 4: Train your WaveNet model. This yields the logs-Wavenet folder.
- Step 5: Synthesize audio using the WaveNet model.

Pre-trained models and audio samples will be added at a later date. You can, however, check some early insights into the model's performance at the first stages of training here. For an in-depth exploration of the model architecture, training procedure and preprocessing logic, refer to our wiki.
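
Step 1 (preprocessing) typically starts by reading the dataset's transcript file. LJSpeech ships a `metadata.csv` whose lines hold three pipe-separated fields: clip ID, raw transcription and normalized transcription. The sketch below parses that layout. The `Utterance` type, the fallback to the raw text when the normalized field is empty, and the sample IDs are illustrative assumptions, not this repository's preprocessing code.

```python
import csv
import io
from typing import List, NamedTuple, TextIO


class Utterance(NamedTuple):
    wav_name: str  # clip file name, assumed to live in the dataset's wavs/ folder
    text: str      # normalized transcription (falls back to the raw text)


def parse_ljspeech_metadata(stream: TextIO) -> List[Utterance]:
    """Parse LJSpeech-style lines of the form: id|raw text|normalized text."""
    utterances = []
    # QUOTE_NONE: transcripts may contain quote characters that are not CSV quoting.
    reader = csv.reader(stream, delimiter="|", quoting=csv.QUOTE_NONE)
    for row in reader:
        if not row:  # skip blank lines
            continue
        if len(row) < 2:
            raise ValueError(f"malformed metadata line: {row!r}")
        utt_id, raw_text = row[0], row[1]
        normalized = row[2] if len(row) > 2 and row[2] else raw_text
        utterances.append(Utterance(f"{utt_id}.wav", normalized))
    return utterances


if __name__ == "__main__":
    # Hypothetical sample lines for illustration only.
    sample = "LJ000-0000|Hello, World!|hello, world!\nLJ000-0001|Raw only|\n"
    for utt in parse_ljspeech_metadata(io.StringIO(sample)):
        print(utt)
```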

However, the authors did not release their source code or training data. Here is the expected loss curve when training on LJ Speech with the default hyperparameters:

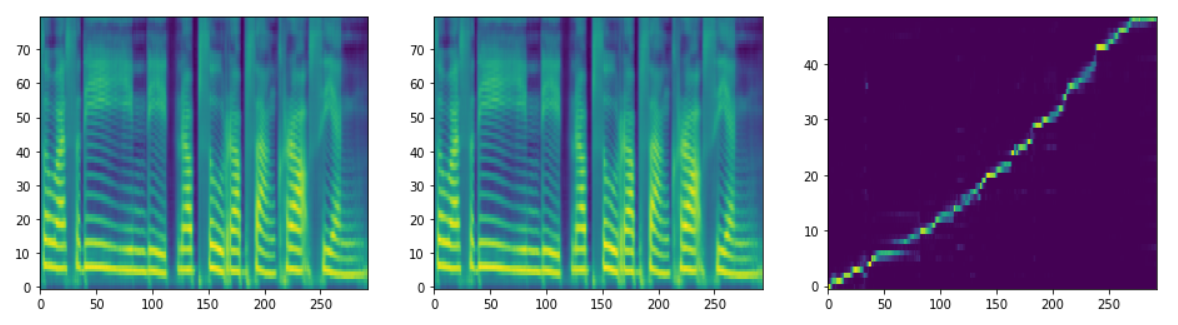

Yet another PyTorch implementation of Tacotron 2, with a reduction factor and faster training. The project is heavily based on existing implementations. I made some modifications to improve the speed and performance of both training and inference. Currently, only LJ Speech is supported. You can modify hparams. You can find alignment images and synthesized audio clips during training. The text to synthesize can be set in hparams.
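
With a reduction factor r, the decoder predicts r mel frames per step. A spectrogram of T frames therefore needs only ceil(T / r) decoder steps, which is where much of the training and inference speedup comes from. The sketch below pads a mel-spectrogram to a multiple of r and groups the frames the way one decoder step would consume them. Zero padding is an assumption; some implementations pad with a "silence" value instead. The function name is hypothetical.

```python
from typing import List, Sequence

Frame = List[float]


def group_by_reduction_factor(mel: Sequence[Frame], r: int) -> List[List[Frame]]:
    """Pad T mel frames to a multiple of r and split them into ceil(T / r) groups.

    Each group holds the r frames a decoder would emit in a single step.
    """
    if r < 1:
        raise ValueError("reduction factor r must be >= 1")
    if not mel:
        return []
    n_mels = len(mel[0])
    pad = (-len(mel)) % r  # frames needed to reach a multiple of r
    padded = list(mel) + [[0.0] * n_mels for _ in range(pad)]  # zero padding (assumed)
    return [padded[i:i + r] for i in range(0, len(padded), r)]
```

For 5 frames and r = 2, the function returns 3 groups, and the last group ends with one padding frame. With r = 1 it degenerates to one frame per decoder step.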

While browsing the Internet, I have noticed a large number of people claiming that Tacotron-2 is not reproducible, or that it is not robust enough to work on datasets other than Google's internal speech corpus. Although some open-source works [1], [2] have proven to give good results with the original Tacotron or even with WaveNet, it still seemed a little harder to reproduce the Tacotron 2 results with high fidelity to the descriptions in the Tacotron-2 (T2) paper. In this complementary documentation, I will mostly try to cover some ambiguities where interpretations might differ, and prove along the way that T2 actually works with open-source speech corpora such as the LJSpeech dataset. Also, because of the paper's size limits, the authors cannot go into much detail, so they usually refer to previous works; in this documentation I extracted the relevant information from those references to make life a bit easier. Last but not least, although it is only being released now, this documentation was mostly written in parallel with development, so pardon the disorder; I did my best to make it clear enough. Feel free to correct any mistakes you encounter or to contribute added-value material (experiment results, plots, etc.).

A TensorFlow implementation of Google's Tacotron speech synthesis with a pre-trained model (unofficial). BSDClause license. This implementation uses code from the following repos: Keith Ito and Prem Seetharaman, as described in our code. Quickstart: you can install the Python dependencies with pip3 install -r requirements.

The Tacotron 2 and WaveGlow models form a text-to-speech system that enables users to synthesize natural-sounding speech from raw transcripts without any additional prosody information.

MIT license. This is an independent attempt to provide an open-source implementation of the model described in the original paper. The model can learn alignment in only 5k steps. This code works with TensorFlow 1. WaveGlow is also available via torch. Before proceeding, you must pick the hyperparameters that best suit your needs.
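
Audio hyperparameters are among the first to pick, because the hop length fixes how many mel frames the decoder must predict per second of speech. The sketch below groups a few such settings in a validated dataclass. The field names and defaults are illustrative assumptions (values commonly used with 22,050 Hz LJSpeech audio), so check the hparams file of the implementation you actually run. The frame-count formula assumes a centered STFT.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AudioHParams:
    # Illustrative defaults (assumed); not read from any specific repository.
    sampling_rate: int = 22050
    filter_length: int = 1024  # FFT size
    hop_length: int = 256      # samples between successive frames
    win_length: int = 1024     # analysis window size
    n_mel_channels: int = 80

    def __post_init__(self) -> None:
        if self.win_length > self.filter_length:
            raise ValueError("win_length must not exceed filter_length")
        if not 0 < self.hop_length <= self.win_length:
            raise ValueError("hop_length must be in (0, win_length]")

    @property
    def frame_shift_ms(self) -> float:
        """Time between consecutive mel frames, in milliseconds."""
        return 1000.0 * self.hop_length / self.sampling_rate

    def num_frames(self, num_samples: int) -> int:
        """Frame count for a centered STFT (assumption): 1 + num_samples // hop_length."""
        return 1 + num_samples // self.hop_length


if __name__ == "__main__":
    base = AudioHParams()
    # Override a single field while keeping the validation in __post_init__.
    faster = replace(base, hop_length=200)
    print(base.frame_shift_ms, faster.frame_shift_ms)
```

With these defaults, one second of audio (22,050 samples) yields 87 frames at roughly 11.6 ms per frame. A smaller hop produces more frames and therefore longer decoder sequences.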
