Text-generation-webui

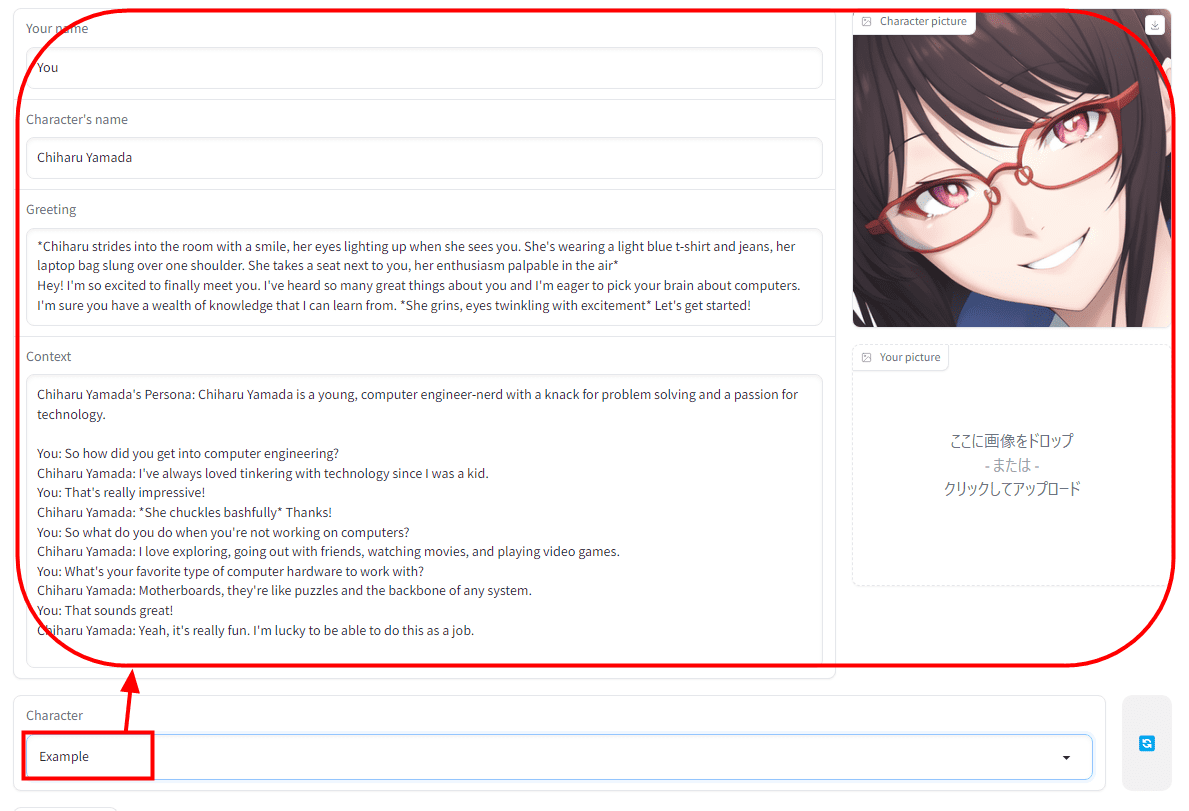

Text-generation-webui provides a user-friendly interface for interacting with large language models and generating text, with features such as model switching, notebook mode, chat mode, and more.

It offers many convenient features, such as managing multiple models and a variety of interaction modes. See this guide for installing on Mac. The GUI acts as a middleman, in a good sense, that makes using the models a more pleasant experience. You can still run a model without a GPU. The one-click installer is recommended for regular users; installing from the command line is for those who want to modify the code or contribute to the code base.


In case you need to reinstall the requirements, you can simply delete the installer_files folder and start the web UI again. The script accepts command-line flags.

On Linux or WSL, Conda can be installed automatically with two commands. If you need nvcc to compile a library manually, use a Conda command that also installs the CUDA toolkit (which provides nvcc).

Install llama-cpp-python manually using the appropriate command for your hardware, as described in its "Installation from PyPI" instructions.

To update, use the update commands for your installation method.

Models are usually downloaded from Hugging Face. You can use the "Model" tab of the UI to download a model from Hugging Face automatically. It is also possible to download a model from the command line.

If you would like to contribute to the project, check out the Contributing guidelines.

In August, Andreessen Horowitz (a16z) provided a generous grant to encourage and support my independent work on this project. I am extremely grateful for their trust and recognition.

Install the web UI.


LLMs work by generating one token at a time. Given your prompt, the model calculates the probabilities for every possible next token. The actual token generation happens after that, when one token is picked from that distribution according to the sampling parameters. The default presets were obtained from a blind contest called "Preset Arena", in which hundreds of people voted. The full results of the contest were published.
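To make the two steps concrete, here is a minimal sketch in plain Python, assuming the model has already produced one raw score (logit) per candidate token. It converts the scores into probabilities with a softmax, then picks a token using two common sampling parameters, temperature and top-p (nucleus) sampling. The function names are hypothetical and this is not the web UI's own code; its model loaders implement sampling differently and support many more parameters.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; temperature < 1 sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize the survivors to sum to 1."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Step 1: scores -> probabilities. Step 2: pick one token id."""
    probs = softmax(logits, temperature)
    candidates = top_p_filter(probs, top_p)
    token_ids = list(candidates)
    weights = [candidates[t] for t in token_ids]
    return rng.choices(token_ids, weights=weights, k=1)[0]


if __name__ == "__main__":
    vocab = ["cat", "dog", "fish", "bird"]  # toy vocabulary
    logits = [2.0, 1.0, 0.1, -1.0]          # made-up model scores
    rng = random.Random(0)                  # seeded for repeatability
    token_id = sample_next_token(logits, temperature=0.7, top_p=0.9, rng=rng)
    print("next token:", vocab[token_id])
```

Lowering the temperature concentrates probability on the top-scoring tokens, and a smaller top_p discards the long tail of unlikely tokens before sampling; a preset is essentially a saved combination of such values.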


Description: "There is no tracking information for the current branch. Please specify which branch you want to merge with. See git-pull(1) for details." What are "remote" and "branch"? My computer runs Windows 11. Before this issue, it showed "fatal: detected dubious ownership in repository" and asked me to run a command.


See settings-template. Select the model you just downloaded. You can also set values in MiB, like --gpu-memory MiB. Make sure to inspect the source code of extensions before activating them. In this article, you will learn what text-generation-webui is and how to install it on Windows.
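The --gpu-memory flag sets a per-GPU memory limit. The sketch below shows one way such values could be parsed, assuming (as the MiB remark implies) that a bare number means GiB, that a MiB suffix means MiB, and that one value is given per GPU. The helpers to_mib and parse_args are hypothetical; this is not the web UI's actual argument handling.

```python
import argparse


def to_mib(value: str) -> int:
    """Convert one --gpu-memory value to MiB.

    Assumption: a bare number is GiB; a 'MiB' suffix is already MiB.
    """
    if value.endswith("MiB"):
        return int(value[: -len("MiB")])
    return int(float(value) * 1024)  # 1 GiB = 1024 MiB


def parse_args(argv):
    """Parse a hypothetical command line with one memory value per GPU."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--gpu-memory", nargs="+", default=[])
    args = parser.parse_args(argv)
    # Normalize every value to MiB so limits can be compared directly.
    args.gpu_memory_mib = [to_mib(v) for v in args.gpu_memory]
    return args


if __name__ == "__main__":
    # Two GPUs: 10 GiB on the first, 2048 MiB on the second.
    args = parse_args(["--gpu-memory", "10", "2048MiB"])
    print(args.gpu_memory_mib)  # [10240, 2048]
```

Normalizing to a single unit keeps the comparison logic simple: 3.5 GiB, for example, becomes 3.5 × 1024 = 3584 MiB.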

Extensions are defined by files named script. They are loaded at startup if specified with the --extensions flag.
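As an illustration, here is a minimal sketch of what an extension's script file might contain. It assumes hook functions named input_modifier and output_modifier that receive and return a string, plus a module-level params dict; these names follow the project's extension documentation, but the hook signatures have changed between versions, so treat them as an assumption. The extension name shout and the uppercase setting are hypothetical.

```python
# Hypothetical file: extensions/shout/script.py
# Assumed hook names (input_modifier / output_modifier) and a module-level
# params dict; exact signatures differ between web UI versions.

# Settings for this hypothetical extension ("uppercase" is made up).
params = {
    "uppercase": True,
}


def input_modifier(string, state=None, is_chat=False):
    """Assumed hook: edit the user's text before it is sent to the model."""
    # Trim stray whitespace from the prompt.
    return string.strip()


def output_modifier(string, state=None, is_chat=False):
    """Assumed hook: edit the model's reply before it is displayed."""
    # Shout the reply only when the setting is enabled.
    if params["uppercase"]:
        return string.upper()
    return string
```

Placed in a folder such as extensions/shout/, it would then be enabled at startup with python server.py --extensions shout, assuming the folder name is what --extensions expects.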

As we look towards the future, our vision encompasses the integration of an expanding repertoire of specialized agents. Imagine a world where customer queries are met not with generic responses but with personalized, contextually rich information powered by real-time data.

Manual installation using Conda.

Click Extract.

This bug should have been fixed now.

Downloading models.
