Torch cuda

PyTorch is a general-purpose software package intended for use in scripts written in Python.



I carried out this project during my studies as part of my engineering thesis. Its goal was to write a module that detects the location and dimensions of obstacles from a 3D Lidar scan.

To install a specific version, you have to provide the desired package version:

Pre-commit hooks setup. To improve the development experience, please make sure to install our pre-commit hooks as the very first step after cloning the repository: `poetry run pre-commit install`.

Note: at the moment, only explainable algorithms for image classification are implemented.

At the end of training, the model is saved in PyTorch format. To retrieve and use the ONNX model at the end of training, you first need to create an empty bucket to store it; select the container type and the region that match your needs. To follow this part, make sure you have installed the ovhai CLI on your computer or on an instance.

A step-by-step guide including a notebook, code and examples. The industry itself has grown rapidly and is transforming enterprises and daily life. Many deep learning accelerators have been built to make training more efficient. There are two basic approaches to training neural networks. As you might know, the most computationally demanding part of a neural network is its many matrix multiplications.
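To make the claim about matrix multiplications concrete: multiplying an m×k matrix by a k×n matrix takes m·n·k multiply-add pairs, i.e. about 2·m·n·k floating-point operations, so a fully connected layer with batch size b, `in_features` inputs and `out_features` outputs costs roughly 2·b·in·out FLOPs per forward pass. The pure-Python sketch below counts those operations explicitly. The helper names `matmul` and `dense_layer_flops` are hypothetical, bias terms are ignored, and real frameworks hand this work to optimized BLAS/cuBLAS kernels rather than Python loops.

```python
def matmul(a, b):
    """Naive product of an m x k matrix `a` and a k x n matrix `b`.

    Returns the product and the number of floating-point operations used,
    counting each multiply and each add separately.
    """
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError(f"inner dimensions differ: {k} vs {k2}")
    out = [[0.0] * n for _ in range(m)]
    flops = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply and one add
                flops += 2
            out[i][j] = acc
    return out, flops


def dense_layer_flops(batch, in_features, out_features):
    """Approximate forward-pass FLOPs of a fully connected layer (bias ignored)."""
    return 2 * batch * in_features * out_features


out, flops = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(out, flops)  # [[19.0, 22.0], [43.0, 50.0]] 16
print(dense_layer_flops(64, 1024, 1024))  # 134217728
```

A single 1024→1024 layer on a batch of 64 therefore already needs about 1.3·10^8 FLOPs for the forward pass alone, and the backward pass adds roughly twice as much again. This is the kind of workload accelerators are designed to speed up.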


The `torch.cuda` module exposes, among others, the following functions. `current_stream()` returns the currently selected Stream for a given device, and `default_stream()` returns the default Stream for a given device. `memory_usage()` returns the percent of time over the past sample period during which global device memory was being read or written, as given by nvidia-smi; `utilization()` returns the percent of time over the past sample period during which one or more kernels was executing on the GPU, also as given by nvidia-smi. `graph` is a context manager that captures CUDA work into a `torch.cuda.CUDAGraph` object for later replay, and `make_graphed_callables()` accepts callables (functions or `nn.Module`s) and returns graphed versions. Finally, `empty_cache()` releases all unoccupied cached memory currently held by the caching allocator, so that it can be used by other GPU applications and becomes visible in nvidia-smi.
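A minimal sketch of querying a few of the `torch.cuda` functions described above, assuming PyTorch may or may not be installed. The helper `describe_cuda` is hypothetical; it only calls documented `torch.cuda` functions and returns early when PyTorch or a GPU is missing. `torch.cuda.utilization()` depends on NVML (the `pynvml` package), so the sketch treats that value as optional.

```python
def describe_cuda() -> dict:
    """Collect a few torch.cuda facts for the current device (hypothetical helper)."""
    try:
        import torch
    except ImportError:
        return {"available": False, "reason": "torch is not installed"}

    if not torch.cuda.is_available():
        return {"available": False, "reason": "no CUDA device is visible"}

    info = {"available": True, "device": torch.cuda.current_device()}
    # Is the currently selected stream the device's default stream?
    info["on_default_stream"] = torch.cuda.current_stream() == torch.cuda.default_stream()
    # Memory occupied by tensors vs. memory reserved by the caching allocator.
    info["allocated_bytes"] = torch.cuda.memory_allocated()
    info["reserved_before_empty_cache"] = torch.cuda.memory_reserved()
    # Release unoccupied cached blocks so other GPU applications can use them.
    torch.cuda.empty_cache()
    info["reserved_after_empty_cache"] = torch.cuda.memory_reserved()
    # utilization() needs NVML (pynvml); record None if it is unavailable.
    try:
        info["utilization_percent"] = torch.cuda.utilization()
    except Exception:
        info["utilization_percent"] = None
    return info


if __name__ == "__main__":
    print(describe_cuda())
```

Calling `empty_cache()` does not free memory still referenced by live tensors; it only returns cached, unused blocks, so `memory_allocated()` is unaffected while `memory_reserved()` may drop.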


PyTorch's main use is building machine learning models and running them on hardware resources. To run the examples below, submit the commands as a job to the SLURM queue with `sbatch job`.

FoXAI simplifies the application of eXplainable AI algorithms to explain the performance of neural network models during training. Model interpretability for PyTorch.

Inside the repository, with Poetry, you can select a specific version of the Python interpreter with the command: This assumes that the Poetry package is installed.

The -t argument lets you choose the identifier to give to your image.

However, if TorchDistributor is not a viable solution, recommended alternatives are also provided in each section. For distributed training options for deep learning, see Distributed training.

Released: Feb 22,

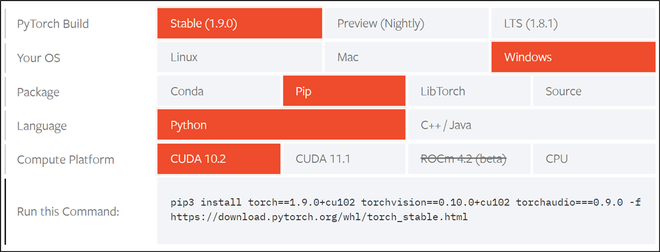

The following example shows how to install PyTorch 1. Then specify the workspace path, install the Python dependencies, and launch the model training using the CMD instruction.

After installation, you can install the desired Python version, e.g.

If the datasets share the same structure, you can split the computation across several devices and run the model in parallel, with each device working on its own isolated portion of the data. In PyTorch this is done by wrapping the model: `model = nn.DataParallel(model)`.

For this example we chose train-cnn-model-export-onnx:latest. To check the available options, type:

Please consider attaching your own Docker registry.
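The data-parallel idea above (equal-sized shards of identically structured data, one per device) can be illustrated without a GPU. The sketch below is a simplified model of synchronous data parallelism under stated assumptions, not PyTorch's implementation: each hypothetical "device" computes the mean-squared-error gradient of a one-parameter linear model `y_hat = w * x` on its own shard, and the shard gradients are then averaged. With equal shard sizes and a mean loss, that average equals the full-batch gradient, which is roughly what `DistributedDataParallel` achieves with an all-reduce. The function names are illustrative only.

```python
def grad_mse(w, xs, ys):
    """Gradient of the mean squared error for the 1-D linear model y_hat = w * x."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def split(seq, parts):
    """Split `seq` into `parts` equal contiguous shards."""
    if len(seq) % parts:
        raise ValueError("batch size must be divisible by the number of devices")
    size = len(seq) // parts
    return [seq[i * size:(i + 1) * size] for i in range(parts)]


def data_parallel_grad(w, xs, ys, devices):
    """Simulate data parallelism: each 'device' sees one shard, results are averaged."""
    shard_grads = [
        grad_mse(w, x_shard, y_shard)
        for x_shard, y_shard in zip(split(xs, devices), split(ys, devices))
    ]
    return sum(shard_grads) / devices


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
print(grad_mse(0.5, xs, ys))                       # -22.5
print(data_parallel_grad(0.5, xs, ys, devices=2))  # -22.5
```

If shards had unequal sizes, a plain average of shard means would weight the smaller shards too heavily, which is one reason the sketch insists on an evenly divisible batch.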
