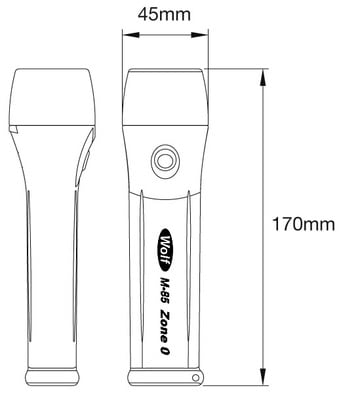

Torch size

In PyTorch, a tensor is a multi-dimensional array containing elements of a single data type.

Tensors are the central data abstraction in PyTorch.

Note that in-place arithmetic functions are methods on the torch.Tensor object. To get the shape of a tensor in PyTorch, we can use the size method.
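As a minimal sketch of the two points above (the tensor names and values here are illustrative assumptions, not taken from the original), the shape can be read with size or the shape attribute, and an in-place method such as add_ is called on the tensor itself:

```python
import torch

x = torch.zeros(2, 3)

# Both calls return a torch.Size describing the tensor's dimensions.
shape_from_method = x.size()
shape_from_attr = x.shape

# Passing a dimension index returns that single extent as a plain int.
rows = x.size(0)

# In-place arithmetic is a method on the tensor (note the trailing underscore).
y = torch.ones(2, 3)
y.add_(1)  # y is modified directly; no new tensor is assigned

print(shape_from_method, shape_from_attr, rows)
print(y)
```

Because torch.Size is a subclass of tuple, it can be compared with an ordinary tuple or indexed like one.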

This is a very quick post in which I familiarize myself with basic tensor operations in PyTorch while also documenting and clarifying details that initially confused me. As you may realize, some of these points of confusion are rather minute details, while others concern important core operations that are commonly used. This document may grow as I start to use PyTorch more extensively for training or model implementation.

There appear to be two ways of specifying the size of a tensor: passing the dimensions as separate, unpacked integer arguments, or passing them as a single tuple. It confused me how the two yielded identical results. Indeed, we can even verify that the two resulting tensors are identical. I thought different behaviors would be expected if I passed in more dimensions, plus some additional arguments like dtype, but this was not true.

The conclusion of this analysis is that the two ways of specifying the size of a tensor are exactly identical. However, one note of caution is that NumPy is more opinionated than PyTorch and exclusively favors the tuple approach over the unpacked one.
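The comparison described here can be sketched as follows. The choice of torch.randn and torch.zeros, the seed, and the variable names are assumptions made for illustration; the original post's exact calls are not shown in the text.

```python
import torch

# Reseed before each call so both draws use the same random stream.
torch.manual_seed(0)
unpacked = torch.randn(2, 3)      # dimensions as separate integers
torch.manual_seed(0)
as_tuple = torch.randn((2, 3))    # dimensions as a single tuple

same_shape = unpacked.shape == as_tuple.shape
same_values = torch.equal(unpacked, as_tuple)

# More dimensions plus a keyword argument behave the same way.
c = torch.zeros(2, 3, 4, dtype=torch.float64)
d = torch.zeros((2, 3, 4), dtype=torch.float64)

print(same_shape, same_values, torch.equal(c, d))
```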

The torch package contains data structures for multi-dimensional tensors and defines mathematical operations over these tensors. Additionally, it provides many utilities for efficient serialization of Tensors and arbitrary types, and other useful utilities. Among them: torch.is_complex returns True if the data type of input is a complex data type; torch.is_conj returns True if the input is a conjugated tensor; torch.is_floating_point returns True if the data type of input is a floating point data type; torch.is_nonzero returns True if the input is a single element tensor which is not equal to zero after type conversions; and torch.set_default_dtype sets the default floating point dtype to d.
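A short, hedged illustration of these utilities follows. The example tensors are made up, and the function names (torch.is_complex, torch.is_conj, torch.is_floating_point, torch.is_nonzero, torch.set_default_dtype) are the ones the descriptions above appear to refer to.

```python
import torch

real = torch.tensor([1.0, 2.0])
cplx = torch.tensor([1 + 2j])

is_float = torch.is_floating_point(real)
is_cplx = torch.is_complex(cplx)

# conj() on a complex tensor returns a lazily conjugated view.
is_conjugated = torch.is_conj(cplx.conj())

# is_nonzero only accepts single-element tensors.
zero_check = torch.is_nonzero(torch.tensor([0.0]))
nonzero_check = torch.is_nonzero(torch.tensor([1.5]))

# Change the default floating point dtype, then restore the previous one.
previous = torch.get_default_dtype()
torch.set_default_dtype(torch.float64)
created_dtype = torch.tensor([1.0]).dtype
torch.set_default_dtype(previous)

print(is_float, is_cplx, is_conjugated, zero_check, nonzero_check, created_dtype)
```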

Passing the shape as a tuple is not strictly necessary: PyTorch will take a series of initial, unlabeled integer arguments as a tensor shape. When you add the optional arguments, though, a tuple can make your intent more readable.

For d, we switched it around: now every row is identical, across layers and columns. The net effect of that was to broadcast the operation over dimensions 0 and 2, causing the random, 3 x 1 tensor to be multiplied element-wise by every 3-element column in a.

This is important: any change made to the source tensor will be reflected in the view on that tensor, unless you clone it.

Your GPU has dedicated memory attached to it.

To convert a torch.Size object to a list of integers, we can use the list method. The resulting output is [2, 3].

One case where reshaping is needed is at the interface between a convolutional layer of a model and a linear layer of the model. This is common in image classification models.
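The ideas in this passage can be sketched together as below. All tensor names and sizes are illustrative assumptions (in particular, the 6 x 20 x 20 feature map is a made-up stand-in for a convolutional layer's output); this is a sketch, not the original tutorial's code.

```python
import torch

# Broadcasting: a (3, 1) tensor is stretched across dimensions 0 and 2.
a = torch.ones(4, 3, 2)
col = torch.rand(3, 1)
product = a * col

# A view shares memory with its source; a clone does not.
source = torch.zeros(2, 3)
view = source.view(6)
copy = source.clone()
source[0, 0] = 7.0  # visible through `view`, but not through `copy`

# torch.Size converts to a plain list of ints.
shape_list = list(source.shape)

# Flattening hypothetical convolutional features for a linear layer:
# (batch, channels, height, width) -> (batch, channels * height * width).
features = torch.rand(1, 6, 20, 20)
flat = features.reshape(features.shape[0], -1)

print(product.shape, view[0].item(), copy[0, 0].item(), shape_list, flat.shape)
```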

How do we move to the faster hardware? If you have an existing tensor living on one device, you can move it to another with the to method.

If your source tensor has autograd enabled, then so will the clone.

You may only squeeze dimensions of extent 1. If we try to squeeze a dimension of size 2, as in the tensor c, we get back the same shape we started with.

There are conditions, beyond the scope of this introduction, where reshape has to return a tensor carrying a copy of the data.
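A minimal sketch of these three behaviors follows, assuming made-up tensor names and sizes. The device selection falls back to the CPU when no GPU is available, so the snippet should run on either kind of machine.

```python
import torch

# Pick a GPU if one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
x = torch.rand(2, 2)
x_on_device = x.to(device)

# A clone keeps the autograd setting of its source.
src = torch.rand(2, 2, requires_grad=True)
cloned = src.clone()
detached_clone = src.detach().clone()  # detach first to drop autograd tracking

# squeeze only removes dimensions of extent 1.
batch = torch.rand(1, 20)
squeezed = batch.squeeze(0)       # the leading extent-1 dimension is removed
c = torch.rand(2, 2)
unchanged = c.squeeze(0)          # dimension 0 has extent 2, so nothing changes

print(x_on_device.device, cloned.requires_grad, detached_clone.requires_grad)
print(squeezed.shape, unchanged.shape)
```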
