Vsee face

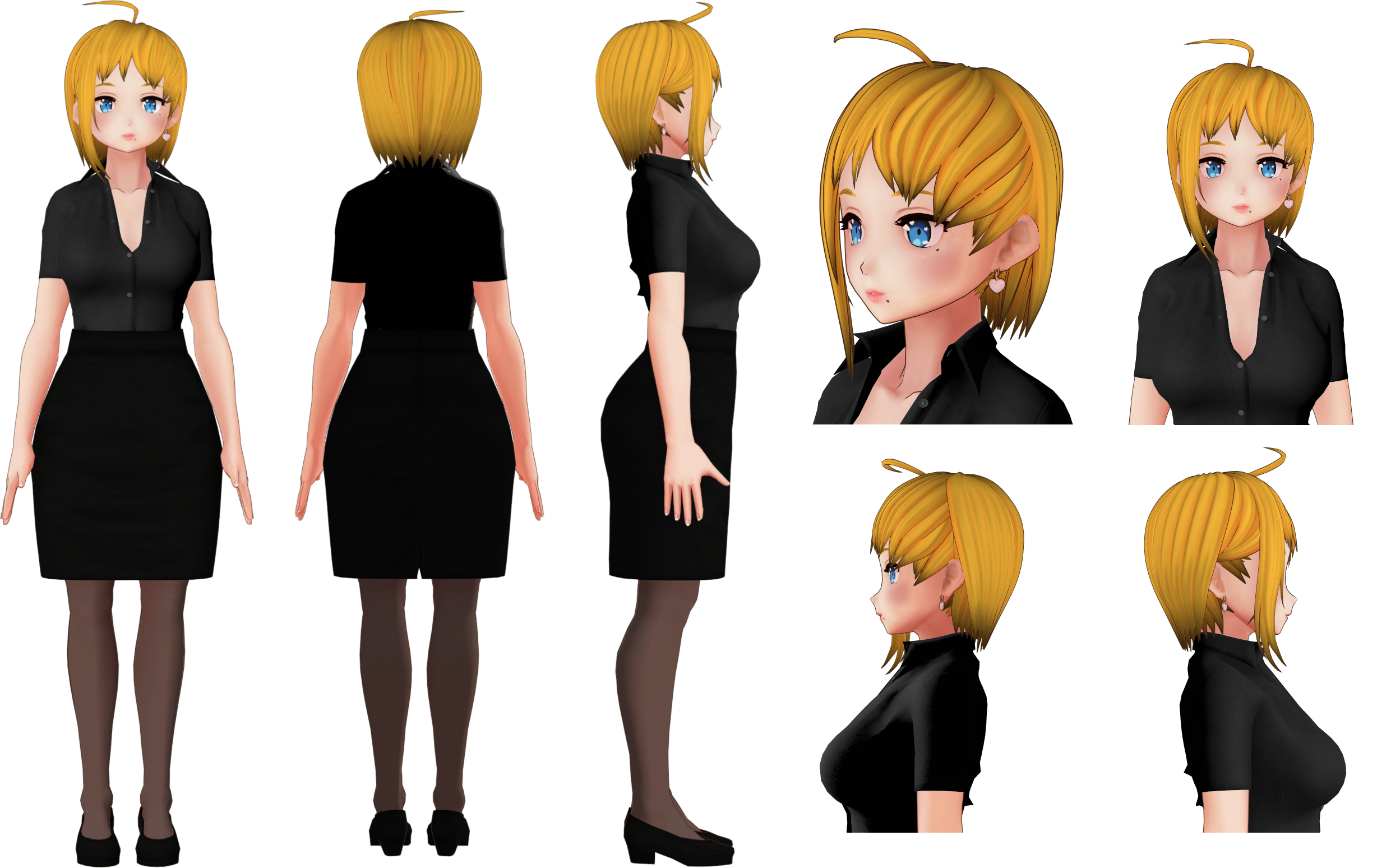

BlendShapeHelper is a Unity script that helps automatically create blendshapes for material toggles.

I am having a major issue and am at my wits' end. I have uninstalled old versions of VSeeFace and my Leap Motion software, and installed the new Leap Motion Gemini software. I have calibrated it and confirmed in the Visualizer that the software is detecting my hardware. I have downloaded the latest VSeeFace software 1. After launching it, I loaded my avatar, and face tracking works perfectly with it. My PC drivers and both programs are up to date.

VSeeFace is a free, highly configurable face and hand tracking VRM and VSFAvatar avatar puppeteering program for virtual YouTubers, with a focus on robust tracking and high image quality. VSeeFace offers functionality similar to Luppet, 3tene, Wakaru and similar programs. VSeeFace runs only on 64-bit Windows 8 and above. Face tracking, including eye gaze, blink, eyebrow and mouth tracking, is done through a regular webcam. For the optional hand tracking, a Leap Motion device is required. You can see a comparison of the face tracking performance compared to other popular vtuber applications here. If you have any questions or suggestions, please check the FAQ first. Please note that Live2D models are not supported. To update VSeeFace, just delete the old folder or overwrite it when unpacking the new version. For details, please see here. Download v1. Just make sure to uninstall any older versions of the Leap Motion software first.

While there are free tiers for Live2D integration licenses, adding Live2D support to VSeeFace would only make sense if people could load their own models.

In some cases, extra steps may be required to get it to work. It automatically disables itself when VSeeFace is closed to reduce its performance impact, so it has to be re-enabled manually the next time it is used. Notes on running under Wine: first, make sure you have the Arial font installed. After selecting a camera and camera settings, a second window should open and display the camera image with green tracking points on your face. It is offered without any kind of warranty, so use it at your own risk. The VRM spring bone colliders seem to be set up in an odd way for some exports. You can follow the guide on the VRM website, which is very detailed and includes many screenshots. If any of the other options are enabled, camera-based tracking will be enabled and the selected parts of it will be applied to the avatar. The version number of VSeeFace is part of its title bar, so after updating, you may also have to update the settings of your game capture. The virtual camera supports loading background images, which can be useful for vtuber collabs over Discord calls, by setting a single-colored background. The settings file is called settings.

The T pose needs to follow these specifications: VRM conversion is a two-step process. If no such prompt appears and the installation fails, starting VSeeFace with administrator permissions may fix this, but it is not generally recommended. A model exported straight from VRoid with the hair meshes combined will probably still have a separate material for each strand of hair. Try setting the camera settings on the VSeeFace starting screen to default settings. Reimport your VRM into Unity and check that your blendshapes are there. After this, a second window should open, showing the image captured by your camera. If you would like to disable the webcam image display, you can change `-v 3` to `-v 0`. If humanoid eye bones are assigned in Unity, VSeeFace will directly use these for gaze tracking. Downgrading to OBS
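Changing `-v 3` to `-v 0` is a plain text substitution on the tracker's command line. The sketch below shows one careful way to do it in Python, assuming you already have the command line as a string (for example, read from the script that launches the tracker; that script and the `disable_preview` helper are hypothetical here, not part of VSeeFace). It only touches a standalone `-v 3`, so options like `-v 30` stay unchanged.

```python
import re


def disable_preview(command_line: str) -> str:
    """Hypothetical helper: turn a standalone '-v 3' option into '-v 0'."""
    # (?<!\S) and (?!\S) require whitespace (or the string edges) around the
    # option, so "-v 30" or "--v 3" are not matched. \g<1> keeps the original
    # whitespace between "-v" and its value.
    return re.sub(r"(?<!\S)-v(\s+)3(?!\S)", r"-v\g<1>0", command_line)


if __name__ == "__main__":
    print(disable_preview("facetracker -c 0 -v 3 -P 1"))
```

Running it prints `facetracker -c 0 -v 0 -P 1`. Matching on whole tokens is safer than a bare string replace, which would also rewrite something like `-v 30`.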
