withColumn in PySpark

`withColumn` is a DataFrame transformation: it returns a new DataFrame with the specified changes and leaves the original DataFrame unaltered.

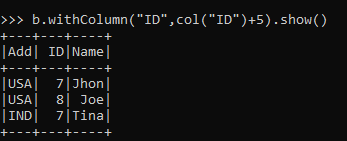

PySpark `withColumn` is a DataFrame transformation used to change the value of an existing column, convert a column's datatype, create a new column, and more. To change a datatype, combine `withColumn` with the `cast` function; for example, a `salary` column stored as String can be converted to Integer by passing `salary` as the first argument and a cast expression as the second. `withColumn` can also change the value of an existing column: pass the existing column name as the first argument and the value to assign as the second argument. Note that the second argument must be of `Column` type. To create a new column, pass a column name that does not exist yet as the first argument.

In PySpark, `withColumn` is a widely used DataFrame transformation for changing the value of a column, converting the datatype of an existing column, creating a new column, and so on. Changing a column's data type is done by combining `withColumn` with the `cast` function. To change the value of an existing column, pass the existing column name as the first argument and the value to assign as the second argument, which must be of `Column` type. Passing a new column name as the first argument creates a new column.

PySpark, part of Apache Spark, was developed by the Apache Software Foundation. An RDD (Resilient Distributed Dataset) is an immutable distributed collection of objects. In an RDD, each dataset is divided into logical partitions which may be computed on different nodes of the cluster. The RDDs concept was launched in the year

A Dataset is a strongly typed data structure in Spark SQL that maps to a relational schema. It represents structured queries with encoders and is an extension of the DataFrame API. Spark Datasets provide both type safety and an object-oriented programming interface. The Datasets concept was launched in the year

`DataFrame.withColumns` returns a new DataFrame by adding multiple columns or replacing existing columns that have the same names. Its `colsMap` argument is a map from column name to `Column`, and each column must only refer to attributes supplied by this DataFrame; it is an error to add columns that refer to some other DataFrame. New in version 3. Currently, only a single map is supported.

Spark `withColumn` is a DataFrame function used to add a new column to a DataFrame, change the value of an existing column, convert the datatype of a column, and derive a new column from an existing one. In this post, I will walk you through these commonly used DataFrame column operations with Scala examples. `withColumn` is a transformation that manipulates the column values of all rows, or selected rows, of a DataFrame. Internally, each `withColumn` call introduces a projection, so calling it many times (for instance, in a loop that adds many columns) can generate large query plans that hurt performance; the Spark documentation recommends using `select` with multiple columns at once instead.

`withColumn` returns a new DataFrame with the updated values. In this article, I will explain how to update or change a DataFrame column using Python examples. Note: the column expression must be an expression over the same DataFrame.
