Drop duplicates pyspark

In this article, you will learn how to use the distinct() and dropDuplicates() functions with a PySpark example. We use the DataFrame below to demonstrate how to get distinct rows and distinct combinations of multiple columns. In the table, the record for the employee named James has duplicate rows: 2 rows have duplicate values in all columns, and 4 rows have duplicate values in the department and salary columns.

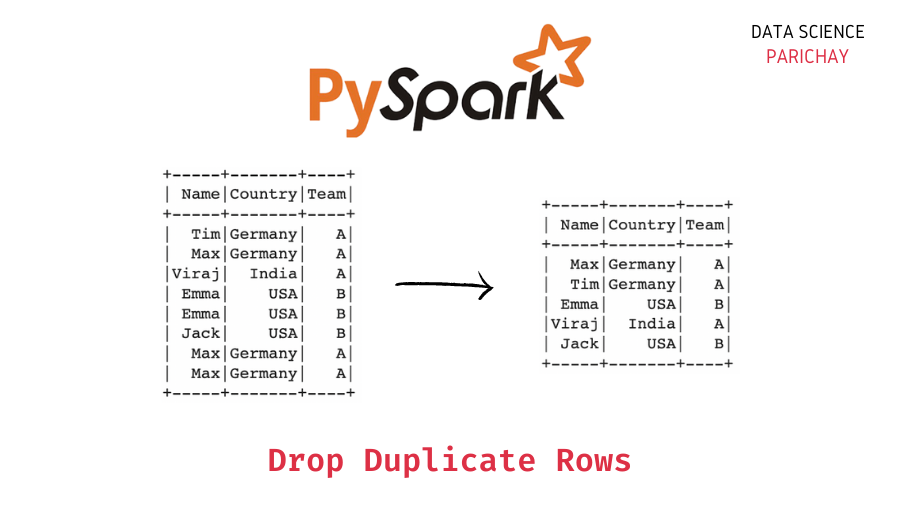

There are three common ways to drop duplicate rows from a PySpark DataFrame: drop rows that have duplicate values across all columns, drop rows that have duplicate values across a chosen set of columns (for example, the team and position columns), or drop rows that have duplicate values in a single column (for example, the team column). When deduplicating on the team and position columns, the resulting DataFrame has no two rows that share the same combination of team and position values.


In this article, we are going to drop duplicate rows from a DataFrame using the distinct() and dropDuplicates() functions in PySpark. We can also use the select() function together with distinct() to get the distinct values of particular columns.

Can distinct be used on specific columns only?

In PySpark, the distinct() function is used to remove duplicate rows, comparing values across all columns of the DataFrame. The dropDuplicates() function removes duplicate rows based on one or more selected columns. Both are transformations, and transformations are lazy: none of them is executed until an action, such as count() or show(), is called. This recipe explains what the distinct() and dropDuplicates() functions are and how to use them in PySpark.

The pandas API on Spark also provides a drop_duplicates() method on its DataFrame, whose keep parameter determines which duplicates, if any, to keep.


The employee DataFrame shown above has a total of 10 rows, 2 of which have all values duplicated, so performing distinct() on it should return 9 rows after removing 1 duplicate row.


In the pandas API on Spark, the subset parameter of DataFrame.drop_duplicates() is optional and takes a column label or a sequence of labels: only those columns are considered when identifying duplicates, and by default all columns are used.

PySpark is the Python API for Apache Spark, developed by the Apache Spark community, for processing data at scale (including streaming data) and analyzing the results from Python. In this recipe, the Spark session is defined first. Note that distinct() does not preserve the original row order; to guarantee a particular order, perform an additional sorting operation, such as orderBy(), after distinct().

What is the difference between the PySpark distinct() and dropDuplicates() methods? Both methods drop duplicate rows from a DataFrame and return a new DataFrame with unique rows. The main difference is that distinct() always compares all columns, whereas dropDuplicates() accepts an optional list of columns to compare; called without arguments, dropDuplicates() behaves like distinct().

In this recipe, SparkSession and expr are imported into the environment in order to use the distinct() and dropDuplicates() functions in PySpark. Spark can handle stream processing as well as batch processing, which is one reason for its popularity. We will create a PySpark DataFrame containing information about different car racers.

Spark DataFrames are immutable, so distinct() does not modify the original DataFrame; it returns a new one. To keep the deduplicated result, assign it to a variable. An inplace parameter exists only on the pandas API on Spark drop_duplicates() method, not on distinct() or dropDuplicates().

In this PySpark SQL article, you have learned how to use the distinct() method to get the distinct rows across all columns, and how to use dropDuplicates() to get distinct rows, including distinct combinations of multiple selected columns. The complete example is available at GitHub for reference.

Last Updated : 29 Aug,
