Kubectl restart a pod

Containers and pods do not always terminate when an application fails. In such cases, you need to explicitly restart the Kubernetes pods.

Several kubectl options come up repeatedly when working with pods and deployments:

- --allow-missing-template-keys: if true, ignore any errors in templates when a field or map key is missing in the template. Only applies to golang and jsonpath output formats.
- -o, --output: output format. One of: json, yaml, name, go-template, go-template-file, template, templatefile, jsonpath, jsonpath-as-json, jsonpath-file.
- -R, --recursive: process the directory used in -f, --filename recursively. Useful when you want to manage related manifests organized within the same directory.
- -l, --selector: label selector to filter on. Matching objects must satisfy all of the specified label constraints.
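As a rough illustration of how these options combine, the sketch below wraps two kubectl invocations in shell functions. The function names, label values, and directory path are hypothetical placeholders, not part of kubectl.

```shell
# Hypothetical helper: restart every Deployment whose labels match ALL of the
# comma-separated constraints in $1 (for example "app=web,tier=frontend").
restart_by_selector() {
  # --output name asks kubectl to report the affected resources in name format.
  kubectl rollout restart deployment --selector "$1" --output name
}

# Hypothetical helper: apply every manifest found under directory $1,
# descending into subdirectories.
apply_recursively() {
  kubectl apply --recursive --filename "$1"
}
```

For example, restart_by_selector "app=web,tier=frontend" would only touch Deployments that carry both labels.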

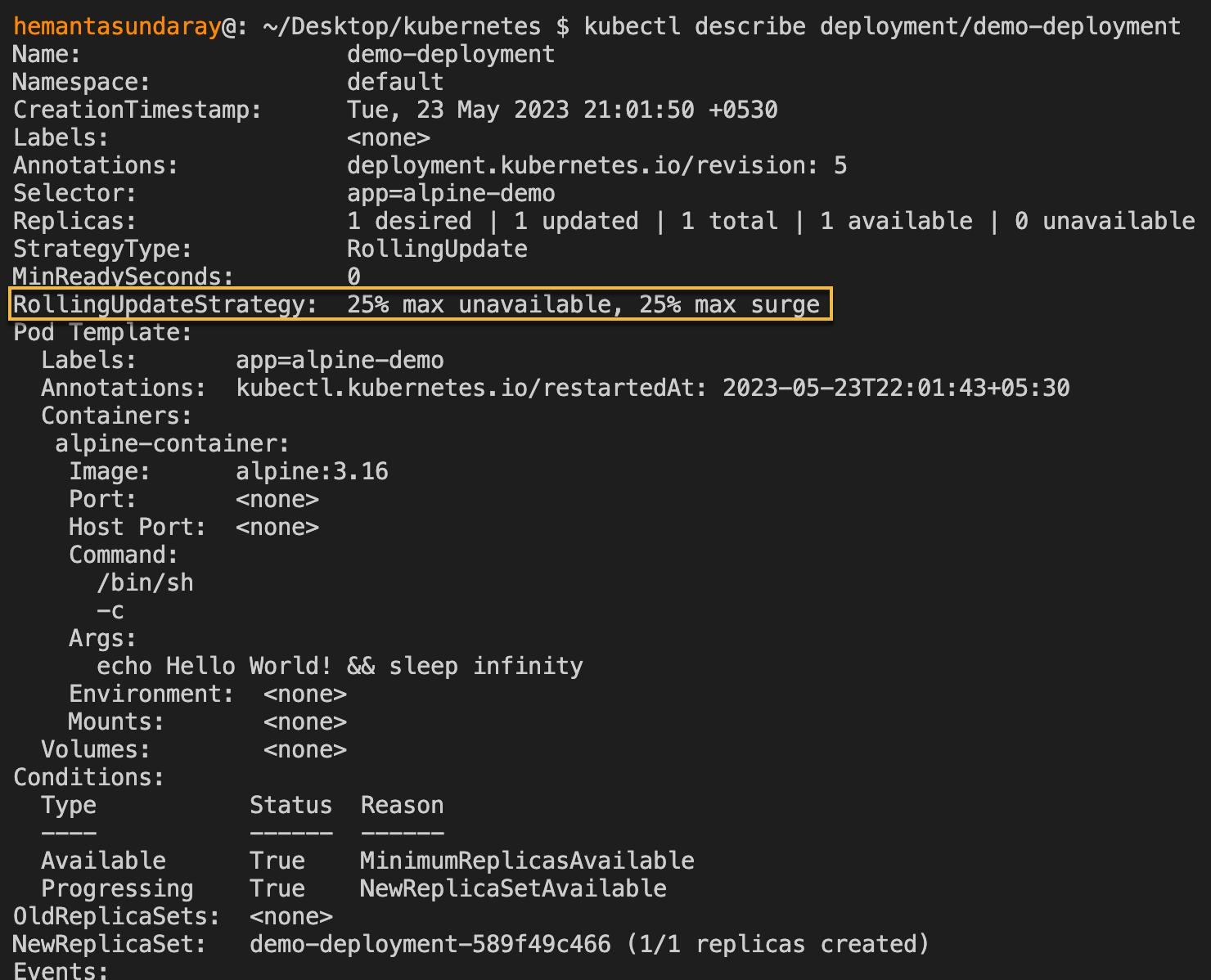

In a cluster, a pod represents a running application process. It holds one or more containers along with the resources they share, such as storage and network. A pod moves through five lifecycle phases: Pending, Running, Succeeded, Failed, and Unknown.

Sometimes something goes wrong with one of your pods (for example, a bug makes the application terminate unexpectedly) and you need to restart it. This tutorial shows how to use kubectl to do that.

kubectl has no direct command for restarting a pod, which is awkward when you do not have the YAML file that created it. In that case, a quick solution is to use the kubectl scale command to set the number of replicas to zero and then scale back up to the desired count. Note that a Deployment does not manage pods directly: it manages a ReplicaSet, which combines the desired number of replicas with the pod template.

Scaling is quick, but the simplest way to restart Kubernetes pods is the kubectl rollout restart command. The controller kills one pod at a time and relies on the ReplicaSet to scale up new pods until every pod is newer than the moment the restart was triggered. A rollout restart is the ideal approach because pods are replaced gradually, so the application generally stays available throughout.
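The two approaches described above can be sketched as shell functions. This is a minimal sketch, not a definitive script: the function names are hypothetical, and the deployment name, replica count, and namespace are placeholders you supply. The rollout restart subcommand assumes kubectl 1.15 or later.

```shell
# Scale to zero, then back up. All pods are removed first, so the
# application is unavailable until the new pods are ready.
restart_by_scaling() {
  # $1 = deployment name, $2 = replica count to restore,
  # $3 = namespace (falls back to "default")
  kubectl scale deployment "$1" --replicas=0 --namespace "${3:-default}" &&
  kubectl scale deployment "$1" --replicas="$2" --namespace "${3:-default}"
}

# Rolling restart. Pods are replaced gradually according to the
# Deployment's update strategy, then we wait for the rollout to finish.
restart_by_rollout() {
  # $1 = deployment name, $2 = namespace (falls back to "default")
  kubectl rollout restart "deployment/$1" --namespace "${2:-default}" &&
  kubectl rollout status "deployment/$1" --namespace "${2:-default}"
}
```

For example, restart_by_rollout web staging would restart the pods of a Deployment named web in the staging namespace and block until the rollout completes.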

Note: Some kubectl commands display a pod as Terminating while it is being destroyed.

In Kubernetes, a pod is the smallest execution unit. A pod may contain one or more containers that share the pod's resources: storage, network, and namespaces. Pods typically have a one-to-one mapping with containers, but in more advanced situations a pod may run multiple containers. Because containers are grouped into pods, Kubernetes can use replication controllers to scale the application horizontally when needed. For instance, if a single pod is overloaded, Kubernetes can automatically replicate it and deploy the copies across the cluster.

A pod is the smallest unit in Kubernetes (K8s). Pods should run until they are replaced by a new deployment. Because of this, there is no way to restart a pod in place; instead, it should be replaced. There are many situations in which you may need to do this. Once new pods are created, they will have different names from the old ones. A list of pods can be obtained using the kubectl get pods command. A rollout restart is the recommended first port of call because it does not introduce downtime: it kills one pod at a time while new pods are scaled up, so some pods keep functioning throughout.
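To see replacement in practice, one option is to delete a single pod and list the pods again. The sketch below assumes the pod is managed by a controller such as a ReplicaSet (otherwise nothing recreates it); the function names, label selector, and pod name are hypothetical placeholders.

```shell
# List the pods that match a label selector, e.g. list_pods app=web
list_pods() {
  # $1 = label selector, $2 = namespace (falls back to "default")
  kubectl get pods --selector "$1" --namespace "${2:-default}"
}

# Delete one pod. If a controller manages it, a replacement pod
# with a new name is created automatically.
replace_pod() {
  # $1 = pod name, $2 = namespace (falls back to "default")
  kubectl delete pod "$1" --namespace "${2:-default}"
}
```

Running list_pods before and after replace_pod should show the old pod name disappearing and a new one taking its place.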

Restarting a Kubernetes pod can be necessary to troubleshoot issues, apply configuration changes, or simply ensure the pod starts fresh with a clean state. This post walks you through restarting pods within a Kubernetes cluster using the command-line tool kubectl. But first, a quick recap. In Kubernetes, a pod is the smallest and most basic deployment unit. A pod represents a single instance of a running process within a cluster. A K8s pod encapsulates one or more containers, storage resources, and network settings that are tightly coupled and need to be scheduled and managed together. Think of it like a container with one or more beer bottles, where the bartender is Kubernetes.

Use a Deployment, which relies on ReplicaSets to manage your pods, so that pods can be updated and restarted periodically without interrupting service or losing requests.

You can restart a pod with the kubectl rollout restart command without making any modifications to the Deployment configuration; you only need to supply the name of the Deployment that manages the pod. This updates all pods for the given Deployment. Scaling is the quicker solution, but rollout restart is the simplest way to restart Kubernetes pods. It is less disruptive than removing a pod, but it may take longer.

Updating the container image also triggers a rollout. Running kubectl get pods immediately after the update lets you see the rollout process in action: Kubernetes creates a new pod while the old one is being replaced.

The kubectl scale method introduces an outage and is not recommended. If you do use it, after scaling down you scale the Deployment up again to the desired number of replicas, which triggers the creation of new pods.

For a richer understanding, combine hands-on experience with resources from the community.
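The image-update flow described above might look like the following sketch. The function name is hypothetical, and the deployment name, container name, and image tag are placeholders you supply.

```shell
# Change the image of one container in a Deployment, then list the pods
# straight away to watch old pods terminate and new ones start.
update_image() {
  # $1 = deployment name, $2 = container name, $3 = new image,
  # $4 = namespace (falls back to "default")
  kubectl set image "deployment/$1" "$2=$3" --namespace "${4:-default}" &&
  kubectl get pods --namespace "${4:-default}"
}
```

For example, update_image web app nginx:1.25 prod would set the app container of the web Deployment to nginx:1.25 and then list the pods in the prod namespace.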

There is no 'kubectl restart pod' command.

When you increase the number of replicas, Kubernetes creates new pods. To check whether Kubernetes has created a new pod, run kubectl get pods, which lists the pods in the current namespace.

You may need to restart a pod for the following reasons:

- Configuration changes: you may need to restart the pod to apply changes you made to the configuration of your application or environment.
- Unapproved resource usage or unexpected software behavior: for example, if a container tries to allocate more memory than its limit allows, the pod will terminate with an out-of-memory (OOM) error.

To help the kubelet determine when a pod is ready to serve traffic or needs to be restarted, you can configure readiness and liveness probes for your pods.

A pod is in the Running phase once it has been bound to a node and all of its containers have been created.

The troubleshooting process in Kubernetes is complex and, without the right tools, can be stressful, ineffective, and time-consuming.
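When deciding whether a pod needs attention, it can help to see how often its containers have restarted and why the last one stopped. The sketch below reads those fields from the pod status with a jsonpath template; the function name and pod name are hypothetical placeholders.

```shell
# Print one line per container: name, restart count, and the reason the
# previous instance terminated (for example OOMKilled). The reason is
# empty when the container has not been terminated before.
pod_restart_info() {
  # $1 = pod name, $2 = namespace (falls back to "default")
  kubectl get pod "$1" --namespace "${2:-default}" \
    --output jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.restartCount}{"\t"}{.lastState.terminated.reason}{"\n"}{end}'
}
```

A call such as pod_restart_info web-abc123 prod prints tab-separated columns, which makes the output easy to feed into other shell tools.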
