Spark DataFrame

Spark has an easy-to-use API for handling structured and semi-structured data called the DataFrame. Every DataFrame has a blueprint called a schema, which defines the names and data types of its columns.

This tutorial shows you how to load and transform U.S. city population data. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks: creating a DataFrame with Python, and viewing and interacting with a DataFrame.

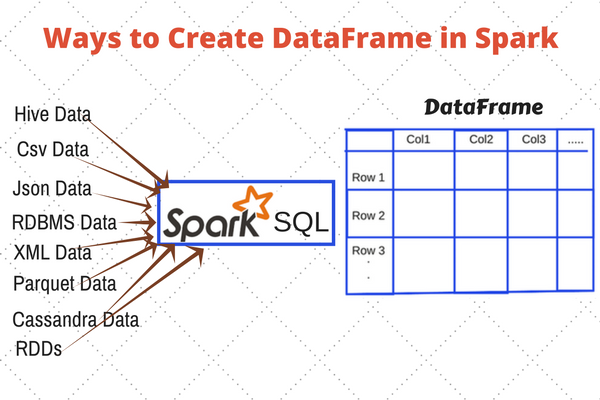

Spark SQL is a Spark module for structured data processing. Unlike the basic Spark RDD API, the interfaces provided by Spark SQL give Spark more information about the structure of both the data and the computation being performed, and internally Spark SQL uses this extra information to perform extra optimizations. The same execution engine computes a result regardless of which API or language is used to express the computation. This unification means that developers can easily switch back and forth between different APIs based on which provides the most natural way to express a given transformation. The examples in the Spark SQL guide use sample data included in the Spark distribution and can be run in the spark-shell, pyspark shell, or sparkR shell. Spark SQL can also be used to read data from an existing Hive installation; for configuration details, see the Hive Tables section of the Spark SQL guide.

A Dataset is a distributed collection of data. Dataset is an interface added in Spark 1.6. A Dataset can be constructed from JVM objects and then manipulated using functional transformations (map, flatMap, filter, etc.). The Dataset API is available in Scala and Java. Python does not support the Dataset API, although, thanks to Python's dynamic nature, many of its benefits are already available (for example, you can access a row's field by name as row.columnName). The case for R is similar. A DataFrame is a Dataset organized into named columns. DataFrames can be constructed from a wide array of sources such as structured data files, tables in Hive, external databases, or existing RDDs.

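DataFrames loaded from any data source type can be converted into other types by naming the source format explicitly when reading and writing, for example reading with spark.read.format("json") and saving with df.write.format("parquet").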

The DataFrame API includes methods such as:

- agg: aggregates on the entire DataFrame without groups (shorthand for df.groupBy().agg()).
- alias: returns a new DataFrame with an alias set.
- approxQuantile: calculates the approximate quantiles of numerical columns of a DataFrame.
- checkpoint: returns a checkpointed version of this DataFrame.
- coalesce: returns a new DataFrame that has exactly numPartitions partitions.
- colRegex: selects a column based on the column name specified as a regex and returns it as a Column.
- collect: returns all the records as a list of Row.
- corr: calculates the correlation of two columns of a DataFrame as a double value.

Apache Spark DataFrame is a distributed collection of data organized into named columns, similar to a table in a relational database. It is designed to process large-scale structured and semi-structured data efficiently in distributed computing environments. In this guide, we'll explore Spark DataFrames, from their basic concepts to advanced operations. Schema: the schema defines the structure of the DataFrame, including the names and data types of its columns. It acts as a blueprint for organizing the data.

DataFrames can be created from various sources, such as Hive tables, log tables, external databases, or existing RDDs. A DataFrame can be operated on using relational transformations and can also be used to create a temporary view. Spark offers two methods for converting an existing RDD. The first infers the schema by reflection: in Scala, the names of the arguments to the case class are read using reflection and become the names of the columns. The second is a programmatic interface that allows you to construct a schema and then apply it to an existing RDD.

Partitioned tables store data in directories keyed by the values of the partitioning columns. For example, we can store all our previously used population data in a partitioned table with two extra columns, gender and country, as partitioning columns, using a directory layout such as path/to/table/gender=male/country=US/data.parquet.

Several configuration options affect performance. spark.sql.autoBroadcastJoinThreshold configures the maximum size in bytes for a table that will be broadcast to all worker nodes when performing a join. spark.sql.files.openCostInBytes sets the estimated cost to open a file, measured by the number of bytes that could be scanned in the same time. spark.sql.inMemoryColumnarStorage.batchSize controls the batch size for columnar caching: larger batch sizes can improve memory utilization and compression, but risk out-of-memory errors (OOMs) when caching data. When Hive metastore Parquet table conversion is enabled, metadata of those converted tables is also cached.

Conversions between Spark and pandas can use Apache Arrow, which requires PyArrow; see the PyArrow installation guide for details. In grouped pandas operations, all data for a group is loaded into memory before the function is applied. This can lead to out-of-memory exceptions, especially if the group sizes are skewed.

On Databricks, the tutorial requires permission to create compute enabled with Unity Catalog, and its Step 3 ("View and interact with your DataFrame") explores the city population DataFrames.

There are two ways in which a big data engineer can transform data files: Spark DataFrame methods or Spark SQL. I like the Spark SQL syntax, since SQL is more widely known than the DataFrame methods. How do we manipulate numbers using Spark SQL?

Note that schema inference can be a very time-consuming operation for tables with thousands of partitions. DataFrames provide a domain-specific language for structured data manipulation in Scala, Java, Python, and R. For reading tables from other databases, Spark SQL's JDBC data source should be preferred over using JdbcRDD, because its results are returned as DataFrames that can be processed in Spark SQL or joined with other data sources. You can also convert between PySpark and pandas DataFrames. On Databricks, the sidebar on the homepage gives access to Databricks entities: the workspace browser, Catalog Explorer, workflows, and compute.